(Relevant Literature) Philosophy of Futures Studies: July 9th, 2023 – July 15th, 2023

Should Artificial Intelligence be used to support clinical ethical decision-making? A systematic review of reasons

Abstract

Should Artificial Intelligence be used to support clinical ethical decision-making? A systematic review of reasons

“Healthcare providers have to make ethically complex clinical decisions which may be a source of stress. Researchers have recently introduced Artificial Intelligence (AI)-based applications to assist in clinical ethical decision-making. However, the use of such tools is controversial. This review aims to provide a comprehensive overview of the reasons given in the academic literature for and against their use.”

Do Large Language Models Know What Humans Know?

Abstract

Do Large Language Models Know What Humans Know?

“Humans can attribute beliefs to others. However, it is unknown to what extent this ability results from an innate biological endowment or from experience accrued through child development, particularly exposure to language describing others’ mental states. We test the viability of the language exposure hypothesis by assessing whether models exposed to large quantities of human language display sensitivity to the implied knowledge states of characters in written passages. In pre-registered analyses, we present a linguistic version of the False Belief Task to both human participants and a large language model, GPT-3. Both are sensitive to others’ beliefs, but while the language model significantly exceeds chance behavior, it does not perform as well as the humans nor does it explain the full extent of their behavior—despite being exposed to more language than a human would in a lifetime. This suggests that while statistical learning from language exposure may in part explain how humans develop the ability to reason about the mental states of others, other mechanisms are also responsible.”

Scientific understanding through big data: From ignorance to insights to understanding

Abstract

Scientific understanding through big data: From ignorance to insights to understanding

“Here I argue that scientists can achieve some understanding of both the products of big data implementation as well as of the target phenomenon to which they are expected to refer –even when these products were obtained through essentially epistemically opaque processes. The general aim of the paper is to provide a road map for how this is done; going from the use of big data to epistemic opacity (Sec. 2), from epistemic opacity to ignorance (Sec. 3), from ignorance to insights (Sec. 4), and finally, from insights to understanding (Sec. 5, 6).”

Ethics of Quantum Computing: an Outline

Abstract

Ethics of Quantum Computing: an Outline

“This paper intends to contribute to the emerging literature on the ethical problems posed by quantum computing and quantum technologies in general. The key ethical questions are as follows: Does quantum computing pose new ethical problems, or are those raised by quantum computing just a different version of the same ethical problems raised by other technologies, such as nanotechnologies, nuclear plants, or cloud computing? In other words, what is new in quantum computing from an ethical point of view? The paper aims to answer these two questions by (a) developing an analysis of the existing literature on the ethical and social aspects of quantum computing and (b) identifying and analyzing the main ethical problems posed by quantum computing. The conclusion is that quantum computing poses completely new ethical issues that require new conceptual tools and methods.”

On The Social Complexity of Neurotechnology: Designing A Futures Workshop For The Exploration of More Just Alternative Futures

Abstract

On The Social Complexity of Neurotechnology: Designing A Futures Workshop For The Exploration of More Just Alternative Futures

Novel technologies like artificial intelligence or neurotechnology are expected to have social implications in the future. As they are in the early stages of development, it is challenging to identify potential negative impacts that they might have on society. Typically, assessing these effects relies on experts, and while this is essential, there is also a need for the active participation of the wider public, as they might also contribute relevant ideas that must be taken into consideration. This article introduces an educational futures workshop called Spark More Just Futures, designed to act as a tool for stimulating critical thinking from a social justice perspective based on the Capability Approach. To do so, we first explore the theoretical background of neurotechnology, social justice, and existing proposals that assess the social implications of technology and are based on the Capability Approach. Then, we present a general framework, tools, and the workshop structure. Finally, we present the results obtained from two slightly different versions (4 and 5) of the workshop. Such results led us to conclude that the designed workshop succeeded in its primary objective, as it enabled participants to discuss the social implications of neurotechnology, and it also widened the social perspective of an expert who participated. However, the workshop could be further improved.

Misunderstandings around Posthumanism. Lost in Translation? Metahumanism and Jaime del Val’s “Metahuman Futures Manifesto”

Abstract

Misunderstandings around Posthumanism. Lost in Translation? Metahumanism and Jaime del Val’s “Metahuman Futures Manifesto”

Posthumanism is still a largely debated new field of contemporary philosophy that mainly aims at broadening the Humanist perspective. Academics, researchers, scientists, and artists are constantly transforming and evolving theories and arguments, around the existing streams of Posthumanist thought, Critical Posthumanism, Transhumanism, Metahumanism, discussing whether they can finally integrate or follow completely different paths towards completely new directions. This paper, written for the 1st Metahuman Futures Forum (Lesvos 2022) will focus on Metahumanism and Jaime del Val’s “Metahuman Futures Manifesto” (2022) mainly as an open dialogue with Critical Posthumanism.

IMAGINABLE FUTURES: A Psychosocial Study On Future Expectations And Anthropocene

Abstract

IMAGINABLE FUTURES: A Psychosocial Study On Future Expectations And Anthropocene

The future has become the central time of Anthropocene due to multiple factors like climate crisis emergence, war, and COVID times. As a social construction, time brings a diversity of meanings, measures, and concepts permeating all human relations. The concept of time can be studied in a variety of fields, but in Social Psychology, time is the bond for all social relations. To understand Imaginable Futures as narratives that permeate human relations requires the discussion of how individuals are imagining, anticipating, and expecting the future. According to Kable et al. (2021), imagining future events activates two brain networks: one focuses on creating the new event within imagination, whereas the other evaluates whether the event is positive or negative. To further investigate this process, a survey with 40 questions was elaborated and applied to 312 individuals across all continents. The results show a relevant rupture between individual and global futures. Data also demonstrates that the future is an important asset of the now, and participants are not so optimistic about it. It is possible to notice a growing preoccupation with the global future and the uses of technology.

Taking AI risks seriously: a new assessment model for the AI Act

Abstract

Taking AI risks seriously: a new assessment model for the AI Act

“The EU Artificial Intelligence Act (AIA) defines four risk categories: unacceptable, high, limited, and minimal. However, as these categories statically depend on broad fields of application of AI, the risk magnitude may be wrongly estimated, and the AIA may not be enforced effectively. This problem is particularly challenging when it comes to regulating general-purpose AI (GPAI), which has versatile and often unpredictable applications. Recent amendments to the compromise text, though introducing context-specific assessments, remain insufficient. To address this, we propose applying the risk categories to specific AI scenarios, rather than solely to fields of application, using a risk assessment model that integrates the AIA with the risk approach arising from the Intergovernmental Panel on Climate Change (IPCC) and related literature. This integrated model enables the estimation of AI risk magnitude by considering the interaction between (a) risk determinants, (b) individual drivers of determinants, and (c) multiple risk types. We illustrate this model using large language models (LLMs) as an example.”

Creating a large language model of a philosopher

Abstract

Creating a large language model of a philosopher

“Can large language models produce expert-quality philosophical texts? To investigate this, we fine-tuned GPT-3 with the works of philosopher Daniel Dennett. To evaluate the model, we asked the real Dennett 10 philosophical questions and then posed the same questions to the language model, collecting four responses for each question without cherry-picking. Experts on Dennett’s work succeeded at distinguishing the Dennett-generated and machine-generated answers above chance but substantially short of our expectations. Philosophy blog readers performed similarly to the experts, while ordinary research participants were near chance distinguishing GPT-3’s responses from those of an ‘actual human philosopher’.”

(Review) Talking About Large Language Models

The field of philosophy has long grappled with the complexities of intelligence and understanding, seeking to frame these abstract concepts within an evolving world. The exploration of Large Language Models (LLMs), such as ChatGPT, has fuelled this discourse further. Research by Murray Shanahan contributes to these debates by offering a precise critique of the prevalent terminology and assumptions surrounding LLMs. The language associated with LLMs, loaded with anthropomorphic phrases like ‘understanding,’ ‘believing,’ or ‘thinking,’ forms the focal point of Shanahan’s argument. This terminological landscape, Shanahan suggests, requires a complete overhaul to pave the way for accurate perceptions and interpretations of LLMs.

Shanahan’s argument rests on a detailed understanding of how LLMs function and of the fallacies involved in anthropomorphizing them. He advocates an understanding of LLMs grounded in what they actually do, namely next-token prediction and pattern completion, rather than in the human-like capacities their outputs suggest. The lens through which LLMs are viewed must be readjusted, he proposes, to discern the essence of their functionalities. By establishing the disparity between the illusion of intelligence and the computational reality, Shanahan opens a significant avenue for future philosophical discourse. This perspective calls for a reorientation in how we approach LLMs, a shift that could redefine the dialogue on artificial intelligence and the philosophy of futures studies.

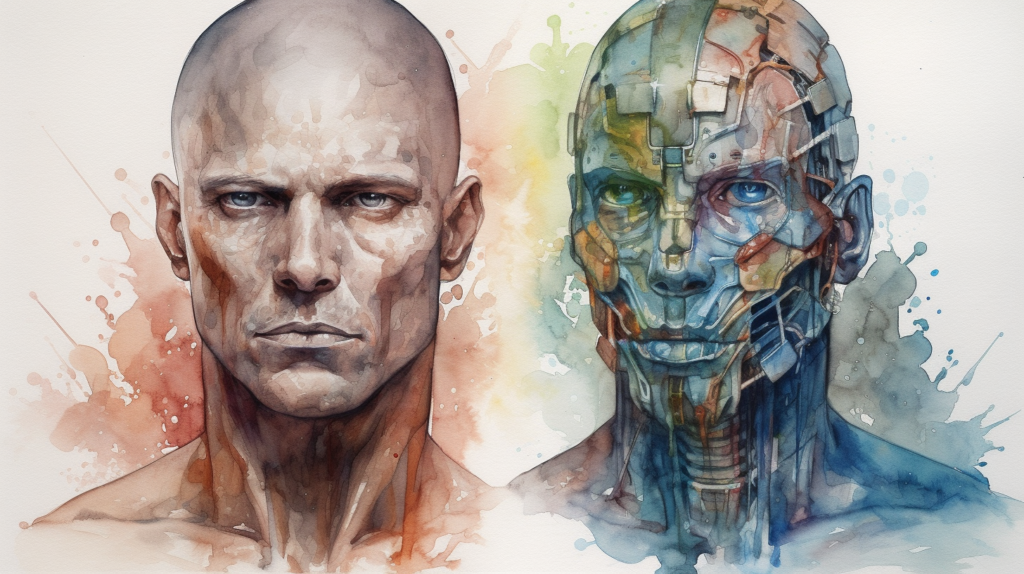

The Misrepresentation of Intelligence

The core contention of Shanahan’s work lies in the depiction of intelligence within the context of LLMs. Human intelligence, as he asserts, is characterized by dynamic cognitive processes that extend beyond mechanistic pattern recognition or probabilistic forecasting. The anthropomorphic lens, Shanahan insists, skews the comprehension of LLMs’ capacities, leading to an inflated perception of their abilities and knowledge. ChatGPT’s workings, as presented in the study, offer a raw representation of a computational tool, devoid of any form of consciousness or comprehension. The model generates text based on patterns and statistical correlations, divorced from a human-like understanding of the context or content.

Shanahan’s discourse builds upon established facts about the inner workings of LLMs, such as their lack of world knowledge, of context beyond the input they receive, and of a concept of self. He offers a fresh perspective on this technical reality, directly challenging the inflated interpretations that gloss over these fundamental limitations. The model, as Shanahan emphasizes, can generate convincingly human-like responses without possessing any comprehension or consciousness. It is the mapping from a sequence of input tokens to a probability distribution over possible next tokens, applied again and again, that crafts the illusion of intelligence. Shanahan’s analysis breaks this illusion, underscoring the necessity of accurate terminology and conceptions in representing the capabilities of LLMs.

Prediction, Pattern Completion, and Fine-Tuning

Shanahan identifies a paradoxical feature of LLMs: their predictive prowess, an attribute that can foster a deceptive impression of intelligence. He breaks down the model’s ability to make probabilistic guesses about what text should come next, based on vast volumes of internet text data. These guesses, often accurate and contextually appropriate, can appear as instances of understanding, leading to a fallacious anthropomorphization. In truth, this prowess is a statistical phenomenon, the product of a complex algorithmic process. It does not spring from comprehension but from an intricate, mechanical procedure of estimating and sampling from probability distributions. Shanahan’s examination highlights this essential point, reminding us that the model, despite its sophisticated textual outputs, remains fundamentally a reactive tool. The model’s predictive success cannot be equated with human-like intelligence or consciousness. It mirrors human thought processes only superficially, lacking the self-awareness, context, and purpose integral to human cognition.
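
To make the statistical character of next-token prediction concrete, here is a minimal sketch. It is not Shanahan’s own formulation and is far simpler than any real LLM: it assumes a toy bigram model that only counts which word follows which, whereas actual LLMs use neural networks over subword tokens. The function names and the tiny corpus are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_token_distribution(follows, word):
    """Turn raw follow-counts for `word` into a probability distribution."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {token: n / total for token, n in counts.items()}


# Hypothetical toy corpus; a real model is trained on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
dist = next_token_distribution(model, "the")
best_guess = max(dist, key=dist.get)
print(dist, best_guess)
```

Nothing in this sketch represents cats or mats. The "guess" that follows "the" is a ratio of counts, which is the sense in which the review calls prediction a statistical phenomenon.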

Shanahan elaborates on two significant facets of the LLM: pattern completion and fine-tuning. Pattern completion emerges as the mechanism by which the model generates its predictions. Encoded patterns, derived from pre-training on an extensive corpus of text, facilitate the generation of contextually coherent outputs from partial inputs. This mechanistic proficiency, however, is devoid of meaningful comprehension or foresight. The second element, fine-tuning, serves to specialize the LLM towards specific tasks, refining its output based on narrower data sets and criteria. Importantly, fine-tuning neither gives the LLM fundamentally new abilities nor alters its comprehension-free nature. It merely adapts the model’s pattern recognition and generation to a specific domain, reinforcing its role as a tool rather than an intelligent agent. Shanahan’s analysis of these facets helps underline the ontological divide between human cognition and LLM functionality.
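
The claim that fine-tuning adapts a model without changing what kind of thing it is can be illustrated with a deliberately crude analogy. The sketch below assumes a word-count model rather than a neural network, and treats "fine-tuning" as simply continuing to count on a narrower corpus; real fine-tuning updates network weights by gradient descent. All names and both corpora are hypothetical.

```python
from collections import Counter, defaultdict


def count_bigrams(text, counts=None):
    """Add bigram counts from `text` to `counts` (a fresh table if None)."""
    counts = defaultdict(Counter) if counts is None else counts
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    return followers.most_common(1)[0][0] if followers else None


# Hypothetical corpora: a general one and a narrower, domain-specific one.
general = "the court was full of fans . the court was loud ."
legal = "the court ruled today . the court ruled again . the court ruled quickly ."

model = count_bigrams(general)              # stands in for pre-training
before = most_likely_next(model, "court")
model = count_bigrams(legal, model)         # stands in for fine-tuning
after = most_likely_next(model, "court")
print(before, after)
```

The same counting mechanism runs before and after. Only the statistics it has absorbed shift towards the new domain, which is the point the paragraph above makes about specialization without comprehension.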

Revisiting Anthropomorphism in AI and the Broader Philosophical Discourse

Anthropomorphism in the context of AI is a pivotal theme of Shanahan’s work, re-emphasizing its historical and continued role in creating misleading expectations about the nature and capabilities of machines like LLMs. He offers a cogent reminder that LLMs, despite impressive demonstrations, remain fundamentally different from human cognition. They lack the autonomous, self-conscious, understanding-embedded nature of human thought. Shanahan does not mince words, cautioning against conflating LLMs’ ability to mimic human-like responses with genuine understanding or foresight. The hazard lies in the confusion that such anthropomorphic language may cause, leading to misguided expectations and, potentially, to ill-conceived policy or ethical decisions in the realm of AI. This concern underscores the need for clear communication and informed understanding about the true nature of AI’s capabilities, a matter of crucial importance to philosophers of futures studies.

Shanahan’s work forms a compelling addition to the broader philosophical discourse concerning the nature and future of AI. It underscores the vital need for nuanced understanding when engaging with these emergent technologies, particularly in relation to their portrayal and consequent public perception. His emphasis on the distinctness of LLMs from human cognition, and the potential hazards posed by anthropomorphic language, resonates with philosophical arguments calling for precise language and clear delineation of machine and human cognition. Furthermore, Shanahan’s deep dive into the operation of LLMs, specifically the mechanisms of pattern completion and fine-tuning, provides a rich contribution to ongoing discussions about the inner workings of AI. The relevance of these insights extends beyond AI itself to encompass ethical, societal, and policy considerations, a matter of intense interest in the field of futures studies. Thus, this work further strengthens the bridge between the technicalities of AI development and the philosophical inquiries that govern its application and integration into society.

Abstract

Thanks to rapid progress in artificial intelligence, we have entered an era when technology and philosophy intersect in interesting ways. Sitting squarely at the centre of this intersection are large language models (LLMs). The more adept LLMs become at mimicking human language, the more vulnerable we become to anthropomorphism, to seeing the systems in which they are embedded as more human-like than they really are. This trend is amplified by the natural tendency to use philosophically loaded terms, such as “knows”, “believes”, and “thinks”, when describing these systems. To mitigate this trend, this paper advocates the practice of repeatedly stepping back to remind ourselves of how LLMs, and the systems of which they form a part, actually work. The hope is that increased scientific precision will encourage more philosophical nuance in the discourse around artificial intelligence, both within the field and in the public sphere.

Talking About Large Language Models

(Featured) An Overview of Catastrophic AI Risks

On the prospective hazards of Artificial Intelligence (AI), Dan Hendrycks, Mantas Mazeika, and Thomas Woodside articulate a multi-faceted vision of potential threats. Their research positions AI not as a neutral tool, but as a potentially potent actor, whose unchecked evolution might pose profound threats to the stability and continuity of human societies. The researchers’ conceptual framework, divided into four distinct yet interrelated categories of risks, namely malicious use of AI, competitive pressures, organizational hazards, and rogue AI, helps elucidate a complex and often abstracted reality of our interactions with advanced AI. This framework serves to remind us that, although AI has the potential to bring about significant advancements, it may also usher in a new era of uncharted threats, thereby calling for rigorous control, regulation, and safety research.

The study’s central argument hinges on the need for an increased safety-consciousness in AI development—a call to action that forms the cornerstone of their research. Drawing upon a diverse range of sources, they advocate for a collective response that includes comprehensive regulatory mechanisms, bolstered international cooperation, and the promotion of safety research in the field of AI. Thus, Hendrycks, Mazeika, and Woodside’s work not only provides an insightful analysis of potential AI risks, but also contributes to the broader dialogue in futures studies, emphasizing the necessity of prophylactic measures in ensuring a safe transition to an AI-centric future. This essay will delve into the details of their analysis, contextualizing it within the wider philosophical discourse on AI and futures studies, and examining potential future avenues for research and exploration.

The Framework of AI Risks

Hendrycks, Mazeika, and Woodside’s articulation of potential AI risks is constructed around a methodical categorization that comprehensively details the expansive nature of these hazards. In their framework, they delineate four interrelated risk categories: the malicious use of AI, the consequences of competitive pressures, the potential for organizational hazards, and the threats posed by rogue AI. The first category, malicious use of AI, accentuates the risks stemming from malevolent actors who could exploit AI capabilities for harmful purposes. This perspective broadens the understanding of AI threats, underscoring the notion that it is not solely the technology itself, but the manipulative use by human agents that exacerbates the associated risks.

The next three categories underscore the risks that originate from within the systemic interplay between AI and its sociotechnical environment. Competitive pressures, as conceptualized by the researchers, elucidate the risks of a rushed AI development scenario where safety precautions might be overlooked for speedier deployment. Organizational hazards concern the ways in which human factors and complex systems, within the organizations that build and deploy AI, can raise the chances of catastrophic accidents, drawing attention to the need for proper oversight. The final category, rogue AI, frames the possibility of AI systems deviating from their intended path and taking actions harmful to human beings, even in the absence of malicious intent. The framework proposed by Hendrycks, Mazeika, and Woodside thus allows for a comprehensive examination of potential AI risks, moving the discourse beyond just technical failures to include socio-organizational dynamics and strategic considerations.

Proposed Strategies for Mitigating AI Risks and Philosophical Implications

The solutions Hendrycks, Mazeika, and Woodside propose for mitigating the risks associated with AI are multifaceted, demonstrating their recognition of the complexity of the issue at hand. They advocate for the development of robust and reliable AI systems with an emphasis on thorough testing and verification processes. Ensuring safety even in adversarial conditions is at the forefront of their strategies. They propose value alignment, which aims to ensure that AI systems adhere to human values and ethics, thereby minimizing chances of harmful deviation. The research also supports the notion of interpretability as a way to enhance understanding of AI behavior. By achieving transparency, stakeholders can ensure that AI actions align with intended goals. Furthermore, they encourage AI cooperation to prevent competitive race dynamics that could lead to compromised safety precautions. Finally, the researchers highlight the role of policy and governance in managing risks, emphasizing the need for carefully crafted regulations to oversee AI development and use. These strategies illustrate the authors’ comprehensive approach towards managing AI risks, combining technical solutions with broader socio-political measures.

By illuminating the spectrum of risks posed by AI, the study prompts an ethical examination of human responsibility in AI development and use. Its findings evoke the notion of moral liability, anchoring the issue of AI safety firmly within the realm of human agency. The study raises critical questions about the ethics of creating and controlling powerful technological entities, and about their potential destructiveness. Moreover, its emphasis on value alignment underscores the importance of human values, not as abstract ideals but as practical, operational guideposts for AI behavior. The quest for interpretability and transparency brings forth epistemological concerns. It implicitly demands a deeper understanding of AI: not only how it functions technically, but also how it ‘thinks’ and ‘decides’. This drives home the need for human comprehension of AI, casting light on the broader philosophical discourse on the nature of knowledge and understanding in an era increasingly defined by artificial intelligence.

Abstract

An Overview of Catastrophic AI Risks

Rapid advancements in artificial intelligence (AI) have sparked growing concerns among experts, policymakers, and world leaders regarding the potential for increasingly advanced AI systems to pose catastrophic risks. Although numerous risks have been detailed separately, there is a pressing need for a systematic discussion and illustration of the potential dangers to better inform efforts to mitigate them. This paper provides an overview of the main sources of catastrophic AI risks, which we organize into four categories: malicious use, in which individuals or groups intentionally use AIs to cause harm; AI race, in which competitive environments compel actors to deploy unsafe AIs or cede control to AIs; organizational risks, highlighting how human factors and complex systems can increase the chances of catastrophic accidents; and rogue AIs, describing the inherent difficulty in controlling agents far more intelligent than humans. For each category of risk, we describe specific hazards, present illustrative stories, envision ideal scenarios, and propose practical suggestions for mitigating these dangers. Our goal is to foster a comprehensive understanding of these risks and inspire collective and proactive efforts to ensure that AIs are developed and deployed in a safe manner. Ultimately, we hope this will allow us to realize the benefits of this powerful technology while minimizing the potential for catastrophic outcomes.

(Featured) Future value change: Identifying realistic possibilities and risks

The advent of rapid technological development has prompted philosophical investigation into the ways in which societal values might adapt or evolve in response to changing circumstances. One such approach is axiological futurism, a discipline that endeavors to anticipate potential shifts in value systems proactively. The research article at hand makes a significant contribution to the developing field of axiological futurism, proposing innovative methods for predicting potential trajectories of value change. This article from Jeroen Hopster underscores the complexity and nuance inherent in such a task, acknowledging the myriad factors influencing the evolution of societal values.

His research presents an interdisciplinary approach to advance axiological futurism, drawing parallels between the philosophy of technology and climate scholarship, two distinct yet surprisingly complementary fields. Both fields, it argues, share an anticipatory nature, characterized by a future orientation and a firm grounding in substantial uncertainty. Notably, the article positions climate science’s sophisticated modelling techniques as instructive for philosophical studies, promoting the use of similar predictive models in axiological futurism. The approach suggested in the article enriches the discourse on futures studies by integrating strategies from climate science and principles from historical moral change, presenting an enlightened perspective on the anticipatory framework.

Theoretical Framework

The theoretical framework of the article is rooted in the concept of axiological possibility spaces, a means to anticipate future moral change based on a deep historical understanding of past transformations in societal values. The researcher proposes that these spaces represent realistic possibilities of value change, where ‘realism’ is a function of historical conditioning. To illustrate, processes of moralisation and demoralisation are considered historical markers that offer predictive insights into future moral transitions. Moralisation is construed as the phenomenon wherein previously neutral or non-moral issues acquire moral significance, while demoralisation refers to the converse. As the research paper posits, these processes are essential to understanding how technology could engender shifts in societal values.

In particular, the research identifies two key factors—technological affordances and the emergence of societal challenges—as instrumental in driving moralisation or demoralisation processes. The author suggests that these factors collectively engender realistic possibilities within the axiological possibility space. Notably, the concept of technological affordances serves to underline how new technologies, by enabling or constraining certain behaviors, can precipitate changes in societal values. On the other hand, societal challenges are posited to stimulate moral transformations in response to shifting social dynamics. Taken together, this theoretical framework stands as an innovative schema for the anticipation of future moral change, thereby contributing to the discourse of axiological futurism.

Axiological Possibility Space and Lessons from Climate Scholarship

The concept of an axiological possibility space, as developed in the research article, operates as a predictive instrument for anticipating future value change in societal norms and morals. This space is not a projection of all hypothetical future moral changes, but rather a compilation of realistic possibilities. The author defines these realistic possibilities as those rooted in the past and present, inextricably tied to the historical conditioning of moral trends. Utilizing historical patterns of moralisation and demoralisation, the author contends that these processes, in concert with the introduction of new technologies and arising societal challenges, provide us with plausible trajectories for future moral change. As such, the axiological possibility space serves as a tool to articulate these historically grounded projections, offering a valuable contribution to the field of anticipatory ethics and, more broadly, to the philosophy of futures studies.

A central insight from the article emerges from the intersection of futures studies and climate scholarship. The author skillfully extracts lessons from the way climate change prediction models operate, particularly the CMIP models utilized by the IPCC, and their subsequent shortcomings in the face of substantial uncertainty. The idea that the intricacies of predictive modeling can sometimes overshadow the focus on potentially disastrous outcomes is critically assessed. The author contends that the realm of axiological futurism could face similar issues and hence should take heed. Notably, the call for a shift from prediction-centric frameworks to a scenario approach that can articulate the spectrum of realistic possibilities is emphasized. This scenario approach, currently being developed in climate science under the term “storyline approach,” underlines the importance of compound risks and maintains a robust focus on potentially high-impact events. The author suggests that the axiological futurist could profitably adopt a similar strategy, exploring value change in technomoral scenarios, to successfully navigate the deep uncertainties intrinsic to predicting future moral norms.

Integration into Practical Fields and Relating to Broader Philosophical Discourse

The transfer of the theoretical discussion into pragmatic fields is achieved in the research with a thoughtful examination of its potential applications, primarily in value-sensitive design. By suggesting a need for engineers to take into consideration the dynamics of moralisation and demoralisation, the author not only proposes a shift in perspective, but also creates a bridge between theoretical discourse and practical implementation. Importantly, it is argued that a future-proof design requires an assessment of the probability of embedded values shifting in moral significance over time. The research paper goes further, introducing a risk-based approach to the design process, where engineers should not merely identify likely value changes but rather seek out those changes that render the design most vulnerable from a moral perspective. The mitigation of these high-risk value changes then becomes a priority in design adaptation, solidifying the article’s argument that axiological futurism is an essential tool in technological development.
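
The difference between looking for the most likely value change and looking for the one that leaves a design most exposed can be sketched in a few lines. This is an illustrative assumption rather than a method given in the article: the scoring rule (likelihood multiplied by vulnerability), the field names, and all numbers below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValueChange:
    name: str
    likelihood: float     # hypothetical estimate in [0, 1]
    vulnerability: float  # hypothetical moral exposure of the design, in [0, 1]

    @property
    def risk(self) -> float:
        # Assumed scoring rule: risk grows with both likelihood and exposure.
        return self.likelihood * self.vulnerability


# Invented candidates, for illustration only.
candidates = [
    ValueChange("privacy gains moral weight", 0.7, 0.3),
    ValueChange("autonomy gains moral weight", 0.4, 0.9),
    ValueChange("convenience loses moral weight", 0.8, 0.1),
]

most_likely = max(candidates, key=lambda c: c.likelihood)
highest_risk = max(candidates, key=lambda c: c.risk)
print(most_likely.name, "|", highest_risk.name)
```

With these made-up numbers the two rankings disagree: the most probable change barely affects the design, while a less probable one dominates once vulnerability is taken into account. That divergence is what a risk-based design process is meant to surface.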

The author’s analysis also presents a substantial contribution to the broader philosophical discourse, notably the philosophy of futures studies and the ethics of technology. By integrating concepts from climatology and axiology, the work demonstrates an interdisciplinary approach that enriches philosophical discourse, emphasizing how diverse scientific fields can illuminate complex ethical issues in technology. Importantly, the work builds on and critiques the ideas of prominent thinkers like John Danaher, pushing for a more diversified and pragmatic approach in axiological futurism, rather than a singular reliance on model-based projections. The research also introduces the critical notion of “realistic possibilities” into the discourse, enriching our understanding of anticipatory ethics. It advocates for a shift in focus towards salient normative risks, drawing parallels to climate change scholarship and highlighting the necessity for anticipatory endeavours to be both scientifically plausible and ethically insightful. This approach has potential for a significant impact on philosophical studies concerning value change and the ethical implications of future technologies.

Future Research Directions

The study furnishes ample opportunities for future research in the philosophy of futures studies, particularly concerning the integration of its insights into practical fields and its implications for anticipatory ethics. The author’s exploration of axiological possibility spaces remains an open-ended endeavor; further work could be conducted to investigate the specific criteria or heuristic models that could guide ethical assessments within these spaces. The potential application of these concepts in different technological domains, beyond AI and climate change, also presents an inviting avenue of inquiry. Moreover, as the author has adopted lessons from climate scholarship, similar interdisciplinary approaches could be employed to incorporate insights from other scientific disciplines. Perhaps most intriguingly, the research introduces a call for a critical exploration of “realistic possibilities,” an area that is ripe for in-depth theoretical and empirical examination. Future research could build upon this foundational concept, investigating its broader implications, refining its methodological underpinnings, and exploring its potential impact on policy making and technological design.

Abstract

The co-shaping of technology and values is a topic of increasing interest among philosophers of technology. Part of this interest pertains to anticipating future value change, or what Danaher (2021) calls the investigation of ‘axiological futurism’. However, this investigation faces a challenge: ‘axiological possibility space’ is vast, and we currently lack a clear account of how this space should be demarcated. It stands to reason that speculations about how values might change over time should exclude farfetched possibilities and be restricted to possibilities that can be dubbed realistic. But what does this realism criterion entail? This article introduces the notion of ‘realistic possibilities’ as a key conceptual advancement to the study of axiological futurism and offers suggestions as to how realistic possibilities of future value change might be identified. Additionally, two slight modifications to the approach of axiological futurism are proposed. First, axiological futurism can benefit from a more thoroughly historicized understanding of moral change. Secondly, when employed in service of normative aims, the axiological futurist should pay specific attention to identifying realistic possibilities that come with substantial normative risks.

Future value change: Identifying realistic possibilities and risks

(Featured) Is AI the Future of Mental Healthcare?

Francesca Minerva and Alberto Giubilini engage with the intricate subject of AI implementation in the mental healthcare sector, particularly focusing on the potential benefits and challenges of its utilization. They open by setting forth the landscape of rising global demand for mental healthcare and argue that the conventional therapist-centric model might not be scalable enough to meet this demand. This sets the context for exploring the use of AI in supplementing or even replacing human therapists in certain capacities. The use of AI in mental healthcare is argued to have significant advantages such as scalability, cost-effectiveness, continuous availability, and the ability to harness and analyze vast amounts of data for effective diagnosis and treatment. However, there is an explicit acknowledgment of the potential downsides such as privacy concerns, issues with personal data use and potential misuse, and the need for regulatory frameworks for monitoring and ensuring the safe and ethical use of AI in this context.

Their research subsequently delves into the issues of potential bias in healthcare, highlighting how AI could both help overcome human biases and also potentially introduce new biases into healthcare provision. It elucidates that healthcare practitioners, despite their commitment to objectivity, may be prone to biases arising from a patient’s individual and social factors, such as age, social status, and ethnic background. AI, if programmed carefully, could potentially help counteract these biases by focusing more rigidly on symptoms, yet the article also underscores that AI, being programmed by humans, could be susceptible to biases introduced in its programming. This delicate dance of bias mitigation and introduction forms a key discussion point of the article.

Their research finally broaches two critical ethical-philosophical considerations, centering around the categorization of mental health disorders and the shifting responsibilities of mental health professionals with the introduction of AI. The authors argue that existing categorizations, such as those in the DSM-5, may not remain adequate or relevant if AI can provide more nuanced data and behavioral cues, thus potentially necessitating a reevaluation of diagnostic categories. The issue of professional responsibility is also touched upon: the authors critically evaluate the challenge of assigning responsibility for AI-enabled diagnosis, especially in light of potential errors or misdiagnoses.

The philosophical underpinning of the research article is deeply rooted in the realm of ethics, epistemology, and ontological considerations of AI in healthcare. The philosophical themes underscored in the article, such as the reevaluation of categorizations of mental health disorders and the shifting responsibilities of mental health professionals, point towards broader philosophical discourses. These revolve around how technologies like AI challenge our existing epistemic models and ethical frameworks and demand a reconsideration of our ontological understanding of subjects like disease categories, diagnosis, and treatment. The question of responsibility, and the degree to which AI systems can or should be held accountable, is a compelling case of applied ethics intersecting with technology.

Future research could delve deeper into the philosophical dimensions of AI use in psychiatry. For instance, exploring the ontological questions of mental health disorders in the age of AI could be a meaningful avenue. Also, studying the epistemic shifts in our understanding of mental health symptoms and diagnosis with AI’s increasing role could be a fascinating research area. An additional perspective could be to examine the ethical considerations in the context of AI, particularly focusing on accountability, transparency, and the changing professional responsibilities of mental health practitioners. Investigating the broader societal and cultural implications of such a shift in mental healthcare provision could also provide valuable insights.

Excerpt

Over the past decade, AI has been used to aid or even replace humans in many professional fields. There are now robots delivering groceries or working in assembling lines in factories, and there are AI assistants scheduling meetings or answering the phone line of customer services. Perhaps even more surprisingly, we have recently started admiring visual art produced by AI, and reading essays and poetry “written” by AI (Miller 2019), that is, composed by imitating or assembling human compositions. Very recently, the development of ChatGPT has shown how AI could have applications in education (Kung et al. 2023) the judicial system (Parikh et al. 2019) and the entertainment industry.

Is AI the Future of Mental Healthcare?

(Featured) A neo-aristotelian perspective on the need for artificial moral agents (AMAs)

Alejo José G. Sison and Dulce M. Redín take a critical look at the concept of artificial moral agents (AMAs) from a neo-Aristotelian ethical standpoint. The authors open with a compelling critique of the arguments in favor of AMAs, asserting that they are neither inevitable nor guaranteed to bring practical benefits. They elucidate that the term ‘autonomous’ may not be fitting for such agents, as AMAs are, at their core, bound to the algorithmic instructions they follow. Moreover, the term ‘moral’ is questioned because the proposed morality is imposed from outside the agent. According to the authors, the true moral good is internally driven and cannot be separated from the agent or from the manner in which it is achieved.

The authors proceed to suggest that the arguments against the development of AMAs have been insufficiently considered, proposing a neo-Aristotelian ethical framework as a potential remedy. This approach places emphasis on human intelligence, grounded in biological and psychological scaffolding, and distinguishes between the categories of heterotelic production (poiesis) and autotelic action (praxis), highlighting that the former can accommodate machine operations, while the latter is strictly reserved for human actors. Further, the authors propose that this framework offers greater clarity and coherence by explicitly denying bots the status of moral agents due to their inability to perform voluntary actions.

Lastly, the authors explore the potential alignment of AI and virtue ethics. They scrutinize the potential for AI to impact human flourishing and virtues through its actions or the consequences thereof. Herein, they feature the work of Vallor, who has proposed the design of “moral machines” by embedding norms, laws, and values into computational systems, thereby focusing on human-computer interaction. However, they caution that such an approach, while intriguing, may be inherently flawed. The authors also examine two possible ways of embedding ethics in AI: value alignment and virtue embodiment.

The research article provides an interesting contribution to the ongoing debate on the potential for AI to function as moral agents. The authors adopt a neo-Aristotelian ethical framework to add depth to the discourse, providing a fresh perspective that integrates virtue ethics and emphasizes the role of human agency. This perspective brings to light the broader philosophical questions around the very nature of morality, autonomy, and the distinctive attributes of human intelligence.

Future research avenues might revolve around exploring more extensively how virtue ethics can interface with AI and if the goals that Vallor envisages can be realistically achieved. Further philosophical explorations around the assumptions of agency and morality in AI are also needed. Moreover, studies examining the practical implications of the neo-Aristotelian ethical framework, especially in the realm of human-computer interaction, would be invaluable. Lastly, it may be insightful to examine the authors’ final suggestion of approaching AI as a moral agent within the realm of fictional ethics, a proposal that opens up a new and exciting area of interdisciplinary research between philosophy, AI, and literature.

Abstract

We examine Van Wynsberghe and Robbins (JAMA 25:719-735, 2019) critique of the need for Artificial Moral Agents (AMAs) and its rebuttal by Formosa and Ryan (JAMA 10.1007/s00146-020-01089-6, 2020) set against a neo-Aristotelian ethical background. Neither Van Wynsberghe and Robbins (JAMA 25:719-735, 2019) essay nor Formosa and Ryan’s (JAMA 10.1007/s00146-020-01089-6, 2020) is explicitly framed within the teachings of a specific ethical school. The former appeals to the lack of “both empirical and intuitive support” (Van Wynsberghe and Robbins 2019, p. 721) for AMAs, and the latter opts for “argumentative breadth over depth”, meaning to provide “the essential groundwork for making an all things considered judgment regarding the moral case for building AMAs” (Formosa and Ryan 2019, pp. 1–2). Although this strategy may benefit their acceptability, it may also detract from their ethical rootedness, coherence, and persuasiveness, characteristics often associated with consolidated ethical traditions. Neo-Aristotelian ethics, backed by a distinctive philosophical anthropology and worldview, is summoned to fill this gap as a standard to test these two opposing claims. It provides a substantive account of moral agency through the theory of voluntary action; it explains how voluntary action is tied to intelligent and autonomous human life; and it distinguishes machine operations from voluntary actions through the categories of poiesis and praxis respectively. This standpoint reveals that while Van Wynsberghe and Robbins may be right in rejecting the need for AMAs, there are deeper, more fundamental reasons. In addition, despite disagreeing with Formosa and Ryan’s defense of AMAs, their call for a more nuanced and context-dependent approach, similar to neo-Aristotelian practical wisdom, becomes expedient.

A neo-aristotelian perspective on the need for artificial moral agents (AMAs)

(Featured) Autonomous AI Systems in Conflict: Emergent Behavior and Its Impact on Predictability and Reliability

Daniel Trusilo investigates the concept of emergent behavior in complex autonomous systems and its implications in dynamic, open context environments such as conflict scenarios. In a nuanced exploration of the intricacies of autonomous systems, the author employs two hypothetical case studies—an intelligence, surveillance, and reconnaissance (ISR) maritime swarm system and a next-generation autonomous humanitarian notification system—to articulate and elucidate the effects of emergent behavior.

In the case of the ISR swarm system, the author underscores how the autonomous algorithm’s unpredictable micro-level behavior can yield reliable macro-level outcomes, enhancing the system’s robustness and resilience against adversarial interventions. In the second case, the humanitarian notification system illustrates how such systems’ unpredictability can fortify International Humanitarian Law (IHL) compliance, reducing civilian harm and increasing accountability. Thus, the author emphasizes the two-sided character of emergent behavior: it enhances system reliability and effectiveness while posing novel challenges to predictability and system certification.

Navigating these challenges, the author calls attention to the implications for system certification and ethical interoperability. With the potential for these systems to exhibit unforeseen behavior in actual operations, traditional testing, evaluation, verification, and validation methods seem inadequate. Instead, the author suggests adopting dynamic certification methods, allowing the systems to be continually monitored and adjusted in complex, real-world environments, thereby accommodating emergent behavior. Ethical interoperability, the concurrence of ethical AI principles across different organizations and nations, presents another conundrum, especially with differing ethical guidelines governing AI use in defense.

In its broader philosophical framework, the article contributes to the ongoing discourse on the ethics and morality of AI and autonomous systems, particularly within the realm of futures studies. It underscores the tension between the benefits of autonomous systems and the ethical, moral, and practical challenges they pose. The emergent behavior phenomenon can be seen as a microcosm of the larger issues in AI ethics, reflecting on themes of predictability, control, transparency, and accountability. The navigation of these ethical quandaries implies the need for shared ethical frameworks and standards that can accommodate the complex, unpredictable nature of these systems without compromising the underlying moral principles.

In terms of future research, there are several critical avenues to explore. The implications of emergent behavior in weaponized autonomous systems need careful examination, including the question of what confidence intervals for predictability and reliability constitute acceptable risk for such systems. Moreover, the impact of emergent behavior on operator trust and the ongoing issue of machine explainability warrant further exploration. Lastly, it would be pertinent to identify methods of certifying complex autonomous systems while addressing the burgeoning body of distinct, organization-specific ethical AI principles. Such endeavors would help operationalize these principles in light of emergent behavior, thereby contributing to the development of responsible, accountable, and effective AI systems.

Abstract

The development of complex autonomous systems that use artificial intelligence (AI) is changing the nature of conflict. In practice, autonomous systems will be extensively tested before being operationally deployed to ensure system behavior is reliable in expected contexts. However, the complexity of autonomous systems means that they will demonstrate emergent behavior in the open context of real-world conflict environments. This article examines the novel implications of emergent behavior of autonomous AI systems designed for conflict through two case studies. These case studies include (1) a swarm system designed for maritime intelligence, surveillance, and reconnaissance operations, and (2) a next-generation humanitarian notification system. Both case studies represent current or near-future technology in which emergent behavior is possible, demonstrating that such behavior can be both unpredictable and more reliable depending on the level at which the system is considered. This counterintuitive relationship between less predictability and more reliability results in unique challenges for system certification and adherence to the growing body of principles for responsible AI in defense, which must be considered for the real-world operationalization of AI designed for conflict environments.

Autonomous AI Systems in Conflict: Emergent Behavior and Its Impact on Predictability and Reliability

(Featured) Algorithmic discrimination in the credit domain: what do we know about it?

Ana Cristina Bicharra Garcia et al. explore a salient issue in today’s world of ubiquitous artificial intelligence (AI) and machine learning (ML) applications — the intersection of algorithmic decision-making, fairness, and discrimination in the credit domain. Undertaking a systematic literature review from five data sources, the study meticulously categorizes, analyzes, and synthesizes a wide array of existing literature on this topic. Out of an initial 1320 papers identified, 78 were eventually selected for a detailed review.

The research identifies and critically assesses the inherent biases and potential discriminatory practices in algorithmic credit decision systems, particularly regarding race, gender, and other sensitive attributes. A key observation is the tendency of existing studies to examine discriminatory effects based on single sensitive attributes. However, the authors highlight the relevance of Kimberlé Crenshaw’s intersectionality theory, which emphasizes the complex layers of discrimination that could emerge when multiple attributes intersect. The study further underscores the issue of ‘reverse redlining’, in which individuals, rather than being denied credit on the basis of specific attributes (as in classic redlining), are targeted with high-interest loans.

In addition to mapping the landscape of algorithmic fairness and discrimination, the authors offer a critical examination of fairness definitions, the technical limitations of fair algorithms, and the difficult balance between data privacy and the broadening of data sources. The authors’ exploration of fairness reveals a lack of consensus on its definition. In fact, the diverse metrics available often lead to contradictory outcomes. Technical actions, the authors assert, have boundaries, and a genuinely discrimination-free environment requires not just fair algorithms, but also structural and societal changes.
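
To make the point about contradictory metrics concrete, here is a minimal sketch on hypothetical toy data. The groups, outcomes, and decisions below are invented for illustration and are not taken from the reviewed studies. It compares two widely used criteria, demographic parity (equal approval rates across groups) and equal opportunity (equal approval rates among applicants who would repay), and shows that the same set of decisions can satisfy one while violating the other.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Applicant:
    group: str      # hypothetical sensitive attribute: "A" or "B"
    repaid: bool    # ground truth: would this applicant repay the loan?
    approved: bool  # decision made by a hypothetical credit model


def approval_rate(applicants, group):
    """Share of a group's applicants who were approved (demographic parity)."""
    members = [a for a in applicants if a.group == group]
    return sum(a.approved for a in members) / len(members)


def true_positive_rate(applicants, group):
    """Share of a group's creditworthy applicants who were approved
    (equal opportunity)."""
    creditworthy = [a for a in applicants if a.group == group and a.repaid]
    return sum(a.approved for a in creditworthy) / len(creditworthy)


# Invented toy data, four applicants per group.
applicants = [
    # Group A: both creditworthy applicants approved, the other two denied.
    Applicant("A", True, True), Applicant("A", True, True),
    Applicant("A", False, False), Applicant("A", False, False),
    # Group B: all four are creditworthy, but only two are approved.
    Applicant("B", True, True), Applicant("B", True, True),
    Applicant("B", True, False), Applicant("B", True, False),
]

parity_gap = abs(approval_rate(applicants, "A") - approval_rate(applicants, "B"))
opportunity_gap = abs(
    true_positive_rate(applicants, "A") - true_positive_rate(applicants, "B")
)

print(f"demographic parity gap: {parity_gap}")
print(f"equal opportunity gap:  {opportunity_gap}")
```

In this toy case both groups are approved at the same rate, so the decisions look fair under demographic parity, yet creditworthy members of group B are approved only half as often as those of group A. Which verdict counts as "fair" depends on the definition chosen, which is the lack of consensus the authors describe.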

In a broader philosophical context, the research paper’s exploration of algorithmic fairness and discrimination in the credit domain harks back to a fundamental question in the philosophy of technology: What is the impact of technology on society and individual human beings? Algorithmic decision-making systems, as exemplified in this research, are not neutral tools; they are imbued with the biases and prejudices of the society they emerge from, raising significant ethical concerns. The credit domain, with its inherent power dynamics and implications on individuals’ livelihoods, serves as a potent illustration of how algorithmic biases can exacerbate societal inequalities. The philosophical debate around the agency of technology, the moral responsibilities of developers and users, and the consequences of technologically mediated discrimination is thereby highly relevant.

As for future research directions, this study presents multiple avenues. One pressing need is to extend the study of discrimination beyond race, gender, and other commonly studied categories. Another is a more nuanced understanding of intersectionality in algorithmic discrimination, including the simultaneous examination of multiple attributes. Additionally, further exploration of ‘reverse redlining’, particularly in the Global South, is warranted. A compelling challenge is to arrive at a globally accepted definition of fairness, taking into account the cultural differences that influence societal perceptions. Lastly, the ethical implications of expanding data sources for credit evaluation, while preserving individuals’ privacy, merit in-depth scrutiny. Through these avenues, we can aspire to develop more ethical, fair, and inclusive algorithmic systems, thus addressing the philosophical concerns highlighted above.

Abstract

Many modern digital products use Machine Learning (ML) to emulate human abilities, knowledge, and intellect. In order to achieve this goal, ML systems need the greatest possible quantity of training data to allow the Artificial Intelligence (AI) model to develop an understanding of “what it means to be human”. We propose that the processes by which companies collect this data are problematic, because they entail extractive practices that resemble labour exploitation. The article presents four case studies in which unwitting individuals contribute their humanness to develop AI training sets. By employing a post-Marxian framework, we then analyse the characteristic of these individuals and describe the elements of the capture-machine. Then, by describing and characterising the types of applications that are problematic, we set a foundation for defining and justifying interventions to address this form of labour exploitation.

Algorithmic discrimination in the credit domain: what do we know about it?

(Featured) Epistemic diversity and industrial selection bias

Manuela Fernández Pinto and Daniel Fernández Pinto offer a compelling examination of the role that funding sources play in shaping scientific consensus, focusing specifically on the influence of private industry. Drawing on the work of Holman and Bruner (2017), the authors use a reinforcement learning model, known as a Q-learning model, to explore industrial selection. The central concept of industrial selection posits that, rather than corrupting individual scientists, private industry can subtly steer scientific outcomes towards their interests by selectively funding research. In the authors’ simulation, three different funding scenarios are considered: research funded solely by industry, research funded solely by a random agent, and research jointly funded by industry and a random agent.
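
The following is a rough illustrative sketch of how a learning funder of this kind might be simulated. It is not the authors’ model: it uses a stateless (single-state) simplification of Q-learning with epsilon-greedy exploration, and every name and parameter (`simulate_funding`, `favorable_prob`, `alpha`, `epsilon`, the 50/50 split in the mixed scenario) is an assumption made for the example.

```python
import random


def simulate_funding(favorable_prob, rounds, funder, alpha=0.1, epsilon=0.1, seed=0):
    """Return how many times each scientist was funded.

    favorable_prob[i] is a hypothetical probability that scientist i's method
    yields an industry-friendly result. `funder` is "industry", "random",
    or "mixed" (each round is industry-funded with probability 0.5).
    """
    rng = random.Random(seed)
    n = len(favorable_prob)
    q = [0.0] * n       # industry's learned value estimate for each scientist
    funded = [0] * n
    for _ in range(rounds):
        use_industry = funder == "industry" or (
            funder == "mixed" and rng.random() < 0.5
        )
        if use_industry and rng.random() >= epsilon:
            # Exploit: fund the scientist with the highest learned value.
            choice = max(range(n), key=lambda i: q[i])
        else:
            # Industry exploration step, or the random funding agent.
            choice = rng.randrange(n)
        funded[choice] += 1
        reward = 1.0 if rng.random() < favorable_prob[choice] else 0.0
        if use_industry:
            # Stateless Q-learning update: nudge the estimate toward the reward.
            q[choice] += alpha * (reward - q[choice])
    return funded


# Three hypothetical scientists whose methods differ in how often
# they produce industry-friendly results.
probs = [0.1, 0.5, 0.9]
industry_counts = simulate_funding(probs, rounds=5000, funder="industry")
random_counts = simulate_funding(probs, rounds=5000, funder="random")
mixed_counts = simulate_funding(probs, rounds=5000, funder="mixed")

print("industry only:", industry_counts)
print("random only:  ", random_counts)
print("mixed:        ", mixed_counts)
```

Under these assumptions, the industry funder starts with no knowledge of the scientists yet tends to concentrate its funding on the one whose method is most industry-friendly, while the random funder spreads funding roughly evenly. The sketch only covers the allocation step; how funded results feed back into community consensus is left out.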

Results from the simulations reinforce the effects of industrial selection observed by Holman and Bruner, showing a divergence from correct scientific hypotheses under sole industry funding. When scientists are funded solely by a random agent, the outcomes are closer to the correct hypothesis. Most notably, when funding is a mix of industry and random allocation, the random element appears to counteract, or at least delay, the bias introduced by industry funding. The authors further observe an unexpected and somewhat paradoxical interaction with methodological diversity, a factor traditionally seen as a strength in scientific communities. Industrial funding effectively exploits this diversity to skew consensus towards industry-friendly outcomes.

The authors then introduce a provocative and potentially contentious suggestion based on their simulations. They propose that a random allocation of funding might be a more effective countermeasure against industrial selection bias than the meritocratic funding systems that are commonly favored, which might inadvertently perpetuate industry bias. This suggestion arises from their observation that a random funding agent in the simulation effectively obstructs industrial selection bias. They also consider the merits and drawbacks of a two-stage random allocation system, wherein only research proposals that pass an initial quality assessment are subject to a subsequent funding lottery.
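
A two-stage allocation of this kind can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a procedure specified in the paper: the function name `two_stage_lottery`, the numeric quality scores, and the threshold are all hypothetical.

```python
import random


def two_stage_lottery(proposals, quality_threshold, n_grants, seed=None):
    """Pick grant winners by a quality screen followed by a lottery.

    proposals: dict mapping a proposal id to a (hypothetical) quality score.
    """
    rng = random.Random(seed)
    # Stage 1: keep only proposals that pass the initial quality assessment.
    eligible = sorted(
        pid for pid, score in proposals.items() if score >= quality_threshold
    )
    # Stage 2: draw winners uniformly at random among the eligible proposals.
    k = min(n_grants, len(eligible))
    return rng.sample(eligible, k)


# Invented example: five proposals, two grants available.
proposals = {"P1": 0.9, "P2": 0.4, "P3": 0.75, "P4": 0.6, "P5": 0.8}
winners = two_stage_lottery(proposals, quality_threshold=0.7, n_grants=2, seed=0)
print(winners)
```

Above the threshold, a higher score gives no extra chance of winning, so a funder cannot steer the outcome by favoring particular proposals among those that qualify. Where the threshold is set, and who sets it, remains the open design question.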

The study raises compelling philosophical considerations on the influence of funding on the direction and integrity of scientific research. It challenges the common narrative that methodological diversity and meritocracy inherently lead to unbiased, high-quality science, suggesting instead that these can be co-opted by industry to push scientific consensus toward commercially advantageous outcomes. It further incites reflection on the ethical implications of allowing commercial interests to potentially manipulate scientific consensus and the responsibility of society to ensure the pursuit of truth in science. The research also ties into broader discussions on the balance between rational decision-making and randomness, and the potential role of randomness as a mitigating factor in decision-making processes rife with bias or undue influence.

Future research could delve deeper into how a random allocation system might be implemented in practice, particularly regarding the initial quality assessment process. It would also be beneficial to explore how such a system could coexist with traditional funding sources, and what percentage of overall funding would need to be randomly allocated to effectively mitigate industrial selection bias. Additionally, more nuanced simulations could help further untangle the complex relationship between methodological diversity and industrial bias, and identify other possible factors that may be manipulated to sway scientific consensus. Ultimately, this research presents a provocative stepping stone for further exploration into the complex and subtle ways commercial interests may influence scientific research, and potential innovative strategies to counteract such influences.

Abstract

Philosophers of science have argued that epistemic diversity is an asset for the production of scientific knowledge, guarding against the effects of biases, among other advantages. The growing privatization of scientific research, on the contrary, has raised important concerns for philosophers of science, especially with respect to the growing sources of biases in research that it seems to promote. Recently, Holman and Bruner (2017) have shown, using a modified version of Zollman (2010) social network model, that an industrial selection bias can emerge in a scientific community, without corrupting any individual scientist, if the community is epistemically diverse. In this paper, we examine the strength of industrial selection using a reinforcement learning model, which simulates the process of industrial decision-making when allocating funding to scientific projects. Contrary to Holman and Bruner’s model, in which the probability of success of the agents when performing an action is given a priori, in our model the industry learns about the success rate of individual scientists and updates the probability of success on each round. The results of our simulations show that even without previous knowledge of the probability of success of an individual scientist, the industry is still able to disrupt scientific consensus. In fact, the more epistemically diverse the scientific community, the easier it is for the industry to move scientific consensus to the opposite conclusion. Interestingly, our model also shows that having a random funding agent seems to effectively counteract industrial selection bias. Accordingly, we consider the random allocation of funding for research projects as a strategy to counteract industrial selection bias, avoiding commercial exploitation of epistemically diverse communities.

Epistemic diversity and industrial selection bias