(Relevant Literature) Philosophy of Futures Studies: July 9th, 2023 – July 15th, 2023

Should Artificial Intelligence be used to support clinical ethical decision-making? A systematic review of reasons

Abstract

“Healthcare providers have to make ethically complex clinical decisions which may be a source of stress. Researchers have recently introduced Artificial Intelligence (AI)-based applications to assist in clinical ethical decision-making. However, the use of such tools is controversial. This review aims to provide a comprehensive overview of the reasons given in the academic literature for and against their use.”

Do Large Language Models Know What Humans Know?

Abstract

“Humans can attribute beliefs to others. However, it is unknown to what extent this ability results from an innate biological endowment or from experience accrued through child development, particularly exposure to language describing others’ mental states. We test the viability of the language exposure hypothesis by assessing whether models exposed to large quantities of human language display sensitivity to the implied knowledge states of characters in written passages. In pre-registered analyses, we present a linguistic version of the False Belief Task to both human participants and a large language model, GPT-3. Both are sensitive to others’ beliefs, but while the language model significantly exceeds chance behavior, it does not perform as well as the humans nor does it explain the full extent of their behavior—despite being exposed to more language than a human would in a lifetime. This suggests that while statistical learning from language exposure may in part explain how humans develop the ability to reason about the mental states of others, other mechanisms are also responsible.”

Scientific understanding through big data: From ignorance to insights to understanding

Abstract

“Here I argue that scientists can achieve some understanding of both the products of big data implementation as well as of the target phenomenon to which they are expected to refer –even when these products were obtained through essentially epistemically opaque processes. The general aim of the paper is to provide a road map for how this is done; going from the use of big data to epistemic opacity (Sec. 2), from epistemic opacity to ignorance (Sec. 3), from ignorance to insights (Sec. 4), and finally, from insights to understanding (Sec. 5, 6).”

Ethics of Quantum Computing: an Outline

Abstract

“This paper intends to contribute to the emerging literature on the ethical problems posed by quantum computing and quantum technologies in general. The key ethical questions are as follows: Does quantum computing pose new ethical problems, or are those raised by quantum computing just a different version of the same ethical problems raised by other technologies, such as nanotechnologies, nuclear plants, or cloud computing? In other words, what is new in quantum computing from an ethical point of view? The paper aims to answer these two questions by (a) developing an analysis of the existing literature on the ethical and social aspects of quantum computing and (b) identifying and analyzing the main ethical problems posed by quantum computing. The conclusion is that quantum computing poses completely new ethical issues that require new conceptual tools and methods.”

On The Social Complexity of Neurotechnology: Designing A Futures Workshop For The Exploration of More Just Alternative Futures

Abstract

Novel technologies like artificial intelligence or neurotechnology are expected to have social implications in the future. As they are in the early stages of development, it is challenging to identify potential negative impacts that they might have on society. Typically, assessing these effects relies on experts, and while this is essential, there is also a need for the active participation of the wider public, as they might also contribute relevant ideas that must be taken into consideration. This article introduces an educational futures workshop called Spark More Just Futures, designed to act as a tool for stimulating critical thinking from a social justice perspective based on the Capability Approach. To do so, we first explore the theoretical background of neurotechnology, social justice, and existing proposals that assess the social implications of technology and are based on the Capability Approach. Then, we present a general framework, tools, and the workshop structure. Finally, we present the results obtained from two slightly different versions (4 and 5) of the workshop. Such results led us to conclude that the designed workshop succeeded in its primary objective, as it enabled participants to discuss the social implications of neurotechnology, and it also widened the social perspective of an expert who participated. However, the workshop could be further improved.

Misunderstandings around Posthumanism. Lost in Translation? Metahumanism and Jaime del Val’s “Metahuman Futures Manifesto”

Abstract

Posthumanism is a new and still widely debated field of contemporary philosophy that mainly aims at broadening the Humanist perspective. Academics, researchers, scientists, and artists are constantly transforming and evolving theories and arguments around the existing streams of Posthumanist thought (Critical Posthumanism, Transhumanism, and Metahumanism), discussing whether they can finally integrate or will instead follow different paths in entirely new directions. This paper, written for the 1st Metahuman Futures Forum (Lesvos 2022), will focus on Metahumanism and Jaime del Val’s “Metahuman Futures Manifesto” (2022), mainly as an open dialogue with Critical Posthumanism.

IMAGINABLE FUTURES: A Psychosocial Study On Future Expectations And Anthropocene

Abstract

The future has become the central time of the Anthropocene due to multiple factors, such as the emergence of the climate crisis, war, and the COVID pandemic. As a social construction, time carries a diversity of meanings, measures, and concepts that permeate all human relations. The concept of time can be studied in a variety of fields, but in Social Psychology, time is the bond of all social relations. Understanding Imaginable Futures as narratives that permeate human relations requires discussing how individuals imagine, anticipate, and expect the future. According to Kable et al. (2021), imagining future events activates two brain networks: one focuses on constructing the new event in imagination, whereas the other evaluates whether the event is positive or negative. To investigate this process further, a 40-question survey was designed and administered to 312 individuals across all continents. The results show a notable rupture between individual and global futures. The data also demonstrate that the future is an important asset of the present, and that participants are not particularly optimistic about it. The responses also reveal a growing concern with the global future and the uses of technology.

Taking AI risks seriously: a new assessment model for the AI Act

Abstract

“The EU Artificial Intelligence Act (AIA) defines four risk categories: unacceptable, high, limited, and minimal. However, as these categories statically depend on broad fields of application of AI, the risk magnitude may be wrongly estimated, and the AIA may not be enforced effectively. This problem is particularly challenging when it comes to regulating general-purpose AI (GPAI), which has versatile and often unpredictable applications. Recent amendments to the compromise text, though introducing context-specific assessments, remain insufficient. To address this, we propose applying the risk categories to specific AI scenarios, rather than solely to fields of application, using a risk assessment model that integrates the AIA with the risk approach arising from the Intergovernmental Panel on Climate Change (IPCC) and related literature. This integrated model enables the estimation of AI risk magnitude by considering the interaction between (a) risk determinants, (b) individual drivers of determinants, and (c) multiple risk types. We illustrate this model using large language models (LLMs) as an example.”

Creating a large language model of a philosopher

Abstract

“Can large language models produce expert-quality philosophical texts? To investigate this, we fine-tuned GPT-3 with the works of philosopher Daniel Dennett. To evaluate the model, we asked the real Dennett 10 philosophical questions and then posed the same questions to the language model, collecting four responses for each question without cherry-picking. Experts on Dennett’s work succeeded at distinguishing the Dennett-generated and machine-generated answers above chance but substantially short of our expectations. Philosophy blog readers performed similarly to the experts, while ordinary research participants were near chance distinguishing GPT-3’s responses from those of an ‘actual human philosopher’.”

(Featured) ChatGPT: deconstructing the debate and moving it forward

Mark Coeckelbergh and David J. Gunkel’s critical analysis compels us to reevaluate our understanding of authorship, language, and the generation of meaning in the realm of Artificial Intelligence. Their analysis moves beyond understanding ChatGPT as a mere algorithmic tool and instead treats it as an active participant in the construction of language and meaning, challenging longstanding preconceptions about authorship. The key argument lies in the subversion of traditional metaphysics, which offers a vantage point from which to reinterpret the role of language and ethics in AI.

The research further offers a critique of Platonic metaphysics, which has historically served as the underpinning for many normative questions. The authors advance an anti-foundationalist perspective, suggesting that the performances and the materiality of text, inherently, possess and create their own meaning and value. The discourse decouples questions of ethics and semantics from their metaphysical moorings, thereby directly challenging traditional conceptions of moral and semantic authority.

Contextualizing ChatGPT

The examination of ChatGPT provides a distinct perspective on the ways AI can be seen as a participant in authorship and meaning-making processes. Drawing on the model’s extensive training data and iterative development, the authors reframe the role of the AI, moving beyond the conventional image of AI as an impersonal tool for human use. The underlying argument stresses the importance of acknowledging that AI not only generates text but also constructs meaning, thereby influencing the larger context in which it operates. In doing so, the article probes the interplay between large language models, authorship, and the very nature of language, reflecting on the ethical and philosophical considerations intertwined with them.

The discussion situates the subject within the framework of linguistic performativity, emphasizing the transformative dynamics of AI in our understanding of authorship and text generation. Specifically, the authors argue that in the context of ChatGPT, authorship is diffused: it moves beyond the sole dominion of the human user to a responsibility shared with the AI system. The textual productions of AI become not mere reflections of pre-established human language patterns but active components in the construction of new narratives and meaning. This proposition calls for a paradigm shift in our understanding of large language models, and the authors provide a substantive foundation for this perspective within their research.

Anti-foundationalism, Ethical Pluralism and AI

The authors champion a view of language and meaning as contingent, socially negotiated constructs, thereby challenging the Platonic metaphysical model that prioritizes absolute truth or meaning. Within the sphere of AI, this perspective disavows the idea of a univocal foundation for value and meaning, asserting instead that AI systems like ChatGPT contribute to meaning-making processes in their interactions and performances. This stance, while likely to incite concerns of relativism, is supported by scholarly concepts such as ethical pluralism and an appreciation of diverse standards, which envision shared norms coexisting with a spectrum of interpretations. The authors extend this philosophical foundation to the development of large language models, arguing for an ethical approach that foregrounds the needs and values of a diverse range of stakeholders in the evolution of this technology.

A central theme of the authors’ exploration is the application of ethical pluralism to AI technologies, specifically large language models (LLMs) like ChatGPT. This approach, inherently opposed to any absolute metaphysics, prioritizes cooperation, respect, and the continuous renewal of standards. As the authors propose, the point is not unilateral decision-making rooted in absolutist beliefs, but the co-creation and negotiation of what is acceptable and desirable in a society as diverse as its ever-evolving standards. This view underscores the role of technologies such as ChatGPT as active agents in the co-construction of meaning, emphasizing the need for these technologies to be developed and used responsibly. This responsibility, according to the authors, should account for the needs and values of a range of stakeholders, both human and non-human, thus bringing a wider ethical concern into the AI discourse.

A Turn Towards Responsibility and Future Research Directions

Drawing on the philosophy of Levinas, the authors advocate a dramatic change in approach, proposing that our principles should spring from ethical considerations rather than from metaphysical foundations. The authors argue that this shift is critically necessary to prevent technological practices from devolving into power games. Here, the notion of responsibility extends beyond human agents to encompass non-human otherness as well, implying a clear departure from traditional anthropocentric paradigms. This proposal requires recognizing the social and technological generation of truth and meaning, acknowledging the performative power structures embedded in technology, and considering the capability to respond to a broad range of others. Consequently, this outlook presents a forward-looking perspective on the ethics and politics of AI technologies, emphasizing the necessity of democratic discussion and ethical reflection and recognizing that these play a primary role in shaping the path of AI.

This critical approach shifts the discourse from metaphysical to ethical and political questions, prompting considerations about the nature of “good” performances and processes, and the factors that determine them. Future investigations should further probe the relationship between power, technology, and authorship, with emphasis on the dynamics of exclusion and marginalization in these processes. The authors call for practical effort and empirical research to uncover the human and nonhuman labour involved in AI technologies, and to examine the fairness of existing decision-making processes. This nexus between technology, philosophy, and language invites interdisciplinary and transdisciplinary inquiries, encompassing fields such as philosophy, linguistics, literature, and more. The authors’ assertions reframe the understanding of authorship and language in the age of AI, presenting a call for a more comprehensive exploration of these interrelated domains in the context of advanced technologies like ChatGPT.

Abstract

Large language models such as ChatGPT enable users to automatically produce text but also raise ethical concerns, for example about authorship and deception. This paper analyses and discusses some key philosophical assumptions in these debates, in particular assumptions about authorship and language and (our focus) the use of the appearance/reality distinction. We show that there are alternative views of what goes on with ChatGPT that do not rely on this distinction. For this purpose, we deploy the two-phased approach of deconstruction and relate our findings to questions regarding authorship and language in the humanities. We also identify and respond to two common counter-objections in order to show the ethical appeal and practical use of our proposal.

(Featured) On Artificial Intelligence and Manipulation

Writing on the ethics of emerging technologies, Marcello Ienca critically examines the role of digital technologies, particularly artificial intelligence, in facilitating manipulation. This research involves a comprehensive analysis of the nature of manipulation, its manifestation in the digital realm, its impacts on human agency, and its ethical ramifications. The findings illuminate the nuanced interplay between technology, manipulation, and ethics, situating the discussion about technology within the broader philosophical discourse.

Ienca distinguishes between persuasion and manipulation, underscoring that effective manipulation works by bypassing a person’s rational defenses. The author further unpacks how artificial intelligence and other digital technologies contribute to manipulation, with a detailed exploration of factors that affect its effectiveness, such as personalization, emotional appeal, social influence, repetition, trustworthiness, user awareness, and time constraints. Finally, Ienca proposes a set of mitigation strategies, including regulatory, technical, and ethical approaches, that aim to protect users from manipulation.

The Nature of Manipulation

Within the discourse on digital ethics, the issue of manipulation has garnered notable attention. Ienca begins with an account of manipulation, revealing its layered complexity. They distinguish manipulation from persuasion, contending that while both aim to alter behavior or attitudes, manipulation uniquely bypasses the rational defenses of the subject. They posit that manipulation’s unethical nature emerges from this bypassing, as it subverts the individual’s autonomy. While persuasion is predicated on providing reasons, manipulation strategically leverages non-rational influence to shape behavior or attitudes. The author thus highlights the ethical chasm between these two forms of influence.

Building on this, the author contends that manipulation becomes especially potent in digital environments, given the technological means available. Digital technologies, such as AI, facilitate an unprecedented capacity to bypass rational defenses by harnessing a broad repertoire of tactics, including personalized messaging, emotional appeal, and repetition. These tactics, which exploit the cognitive vulnerabilities of individuals, are coupled with the broad reach and immediate feedback afforded by digital platforms, magnifying the scope and impact of manipulation. As such, Ienca’s research contributes to a deeper understanding of the nature of digital manipulation and its divergence from the concept of persuasion.

Digital Technologies and the Unraveling of Manipulation

Ienca critically engages with the symbiotic relationship between digital technologies and manipulation. They explain that contemporary platforms, such as social media and search engines, employ personalized algorithms to curate user experiences. While such personalization is often marketed as enhancing user satisfaction, the author contends that it serves as a conduit for manipulation. These algorithms invisibly mould user preferences and beliefs, thereby posing a potent threat to personal autonomy. Ienca extends this analysis to AI technologies as well. A key dimension of the argument concerns “black-box” AI systems, whose decisions cannot be explained, leaving users susceptible to undisclosed manipulative tactics. The inability to scrutinize the processes underpinning these decisions amplifies their potential to manipulate users. The author’s analysis thus illuminates the subversive role digital technologies play in exacerbating the risk of manipulation, informing a nuanced understanding of the ethical complexities inherent to digital environments.

Ienca posits that such manipulation essentially thrives on two key elements: informational asymmetry and the exploitation of cognitive biases. Informational asymmetry is established when the algorithms controlling digital environments wield extensive knowledge about the user, engendering a power imbalance. This knowledge is used to subtly shape the user experience, increasing the user’s susceptibility to manipulation. The exploitation of cognitive biases further solidifies this manipulation by capitalizing on inherent human tendencies, thus subtly directing user choices. An example provided is the use of default settings, which exploit the status quo bias and contribute to passive consent, a potent form of manipulation. The author’s exploration of these elements illustrates the insidious mechanisms by which digital manipulation functions, enriching our understanding of the dynamics at play within digital landscapes.

Mitigation Strategies for Digital Manipulation and the Broader Philosophical Discourse

Ienca proposes a multi-pronged strategy to curb the pervasiveness of digital manipulation, relying significantly on user education and digital literacy and contending that informed users can better identify and resist manipulation attempts. Transparency, particularly around the use of algorithms and data processing practices, is also stressed, as it helps users understand how their data are used. From a regulatory standpoint, Ienca discusses the role of governing bodies in enforcing laws that protect user privacy and promote transparency and accountability. The EU AI Act (2021) is highlighted as a significant stride in this direction. Ienca also advocates ethical design, suggesting that prioritizing users’ cognitive liberty, privacy, transparency, and control in digital technology can reduce the potential for manipulation, and highlights policy proposals aimed at enshrining a neuroright to cognitive liberty and mental integrity. Taken together, these proposals synthesize technical, regulatory, and ethical strategies, underscoring the necessity of cooperation among multiple stakeholders to cultivate a safer digital environment.

This study on digital manipulation connects to a broader philosophical discourse surrounding the ethics of technology and information dissemination, particularly in the age of proliferating artificial intelligence. It is situated at the intersection of moral philosophy, moral psychology, and the philosophy of technology, inquiring into the agency and autonomy of users within digital spaces and the ethical responsibility of technology designers. The discussion of ‘neurorights’ brings to the fore the philosophical debate on personal freedom and cognitive liberty, reinforcing the question of how these rights ought to be defined and protected in a digitized world. The author’s treatment of manipulation not as an anomaly but as an inherent characteristic of pre-designed digital environments challenges traditional understandings of free will and consent in these spaces. This work contributes to the broader discourse on the power dynamics between technology users and creators, a topic of increasing relevance as AI and digital technologies become ubiquitous.

Abstract

The increasing diffusion of novel digital and online sociotechnical systems for arational behavioral influence based on Artificial Intelligence (AI), such as social media, microtargeting advertising, and personalized search algorithms, has brought about new ways of engaging with users, collecting their data and potentially influencing their behavior. However, these technologies and techniques have also raised concerns about the potential for manipulation, as they offer unprecedented capabilities for targeting and influencing individuals on a large scale and in a more subtle, automated and pervasive manner than ever before. This paper provides a narrative review of the existing literature on manipulation, with a particular focus on the role of AI and associated digital technologies. Furthermore, it outlines an account of manipulation based on four key requirements: intentionality, asymmetry of outcome, non-transparency and violation of autonomy. I argue that while manipulation is not a new phenomenon, the pervasiveness, automaticity, and opacity of certain digital technologies may raise a new type of manipulation, called “digital manipulation”. I call “digital manipulation” any influence exerted through the use of digital technology that is intentionally designed to bypass reason and to produce an asymmetry of outcome between the data processor (or a third party that benefits thereof) and the data subject. Drawing on insights from psychology, sociology, and computer science, I identify key factors that can make manipulation more or less effective, and highlight the potential risks and benefits of these technologies for individuals and society. I conclude that manipulation through AI and associated digital technologies is not qualitatively different from manipulation through human–human interaction in the physical world. However, some functional characteristics make it potentially more likely to evade the subject’s cognitive defenses. This could increase the probability and severity of manipulation. Furthermore, it could violate some fundamental principles of freedom or entitlement related to a person’s brain and mind domain, hence called neurorights. To this end, an account of digital manipulation as a violation of the neuroright to cognitive liberty is presented.

(Featured) Farewell to humanism? Considerations for nursing philosophy and research in posthuman times

Olga Petrovskaya explores a groundbreaking domain: the application of posthumanist philosophy within the nursing field. By proposing an innovative perspective on the relational dynamics between humans and non-humans in healthcare, Petrovskaya illuminates the future possibilities of nursing in an increasingly complex and interconnected world. The research critically unpacks the conventional anthropocentric paradigm predominant in nursing and provides an alternative posthumanist framework for understanding nursing practices. Thus, the importance of this work lies not only in its contribution to nursing studies but also in its contribution to the philosophy of futures studies.

Petrovskaya’s inquiry into posthumanist thought is a deep examination of the conventional humanist traditions and their limitations in contemporary healthcare. The research suggests that posthumanism, with its rejection of human-centric superiority and endorsement of complex human-nonhuman interrelations, offers a viable path to reformulate nursing practice. In doing so, the author nudges the academic and professional nursing community to rethink their conventional approaches and consider new methodologies that incorporate posthumanist ideas. As such, Petrovskaya’s work marks a critical juncture in the discourse of futures studies, heralding a transformative approach to nursing.

Nursing and the Posthumanist Paradigm

Petrovskaya carefully unpacks the posthumanist paradigm, emphasizing its pivotal role in reshaping the field of nursing. Posthumanism, as the author illustrates, moves away from the anthropocentric bias of traditional humanism, challenging the supremacy of human reason and universalism. This shift to a more inclusive and egalitarian lens transcends the human/non-human divide, acknowledging the intertwined assemblages of humans and non-human elements. Petrovskaya’s discussion of the posthumanist perspective further exposes the oppressive tendencies and environmental degradation tied to humanism’s colonial, sexist, and racist underpinnings. With its more nuanced approach to understanding the complex relationships between humans and non-human entities, posthumanism underscores the importance of material practices and the fluidity of subjectivities. Petrovskaya’s contribution is thus seminal in bridging this philosophical discourse with nursing practices, facilitating a more comprehensive understanding of their implications and potential transformations.

The application of posthumanist perspectives to nursing has substantial implications for the practice. Through her paper, Petrovskaya brings to light the dynamism and fluidity of nursing practices, suggesting they are not predetermined but are spaces where various versions of the human are formed and contested. This conceptualization echoes the posthumanist emphasis on the evolving nature of subjectivities and positions nursing practices as active agents in the production of these subjectivities. The idea of nursing practices as “worlds in the making” is a potent illustration of this agency, denoting not only a change in perspective but also a fundamental shift in understanding the role and function of nursing within the broader socio-cultural and philosophical context.

Futures of Philosophy and Nursing

The juxtaposition of philosophy and nursing in Petrovskaya’s research further extends the domain of nursing beyond its practical roots and illuminates its deep engagement with philosophical thought. Petrovskaya’s survey of various philosophical works, especially those underrepresented in Western philosophical discourse, underscores the importance of diversity in philosophical thought for nursing studies. Notable philosophers like Wollstonecraft, de Gouges, Yacob, and Amo, despite their contributions, often remain on the margins of mainstream philosophical discourse, mirroring the marginalization faced by nursing as a discipline in academic circles. Spinoza’s work, in particular, holds potential for fostering new insights into nursing practices, given its significance in shaping critical posthumanist thought. Petrovskaya’s work thereby serves as a catalyst for nurse scholars to engage more deeply with alternative philosophies, fostering a more inclusive, diverse, and nuanced understanding of nursing in posthuman times.

Petrovskaya’s research is especially pertinent to futures studies, an interdisciplinary field engaged with critical exploration of possible, plausible, and preferable futures. As the study positions nursing within a posthumanist context, it implicitly challenges the conventional anthropocentric worldview and opens the door to a future where human-nonhuman assemblages are central to the understanding of subjectivities and practice outcomes. These propositions represent a radical shift from current paradigms, setting the stage for a future where the entanglement of humans and nonhumans is recognized and embraced rather than ignored or oversimplified. The novel methodologies that Petrovskaya advocates for studying these assemblages can potentially drive futures studies towards more nuanced, complex, and inclusive explorations of what future nursing practices—and, by extension, human society—might look like.

Abstract

In this paper, I argue that critical posthumanism is a crucial tool in nursing philosophy and scholarship. Posthumanism entails a reconsideration of what ‘human’ is and a rejection of the whole tradition founding Western life in the 2500 years of our civilization as narrated in founding texts and embodied in governments, economic formations and everyday life. Through an overview of historical periods, texts and philosophy movements, I problematize humanism, showing how it centres white, heterosexual, able-bodied Man at the top of a hierarchy of beings, and runs counter to many current aspirations in nursing and other disciplines: decolonization, antiracism, anti-sexism and Indigenous resurgence. In nursing, the term humanism is often used colloquially to mean kind and humane; yet philosophically, humanism denotes a Western philosophical tradition whose tenets underpin much of nursing scholarship. These underpinnings of Western humanism have increasingly become problematic, especially since the 1960s, motivating nurse scholars to engage with antihumanist and, recently, posthumanist theory. However, even current antihumanist nursing arguments manifest deep embeddedness in humanistic methodologies. I show both the problematic underside of humanism and critical posthumanism’s usefulness as a tool to fight injustice and examine the materiality of nursing practice. In doing so, I hope to persuade readers not to be afraid of understanding and employing this critical tool in nursing research and scholarship.

Farewell to humanism? Considerations for nursing philosophy and research in posthuman times

(Work in Progress) Resilient Failure Modes of AI Alignment

This project is housed at the Institute of Futures Research and relates to understanding and regularizing challenges to goal and value alignment in artificially intelligent (AI) systems when those systems exhibit nontrivial degrees of behavioral freedom, flexibility, and agency. Of particular concern are resilient failure modes, that is, failure modes that are intractable to methodological or technological resolution owing to, e.g., fundamental conflicts in the underlying ethical theory, or to epistemic issues such as persistent ambiguity between the ethical theory, empirical facts, and any world models and policies held by the AI.

I will also be characterizing a resilient failure mode that apparently has not been addressed in the extant literature: misalignment incurred when reasoning and acting across shifting levels of abstraction. An intelligence whose outputs appear aligned, via some mechanism, over a given state space is not guaranteed to remain aligned if that state space expands, for instance through in-context learning or reasoning about metastatements. This project will motivate, clarify, and formalize this failure mode as it pertains to artificial intelligence systems.
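To make the worry about an expanding state space concrete, here is a minimal toy sketch in Python. It rests on illustrative assumptions that are not part of the project’s formalization: states are integers, the intended behavior refuses any state at or above a hypothetical harm threshold of 5, and alignment was only checked on states 0 through 9. The names `intended`, `learned`, `agrees_on` and `disagreements` are hypothetical. The two policies cannot be told apart on the original space, yet they diverge on every state added when the space grows.

```python
from typing import Callable, Iterable, List

Policy = Callable[[int], str]


def intended(state: int) -> str:
    # Hypothetical intended behavior: refuse any state at or above a harm threshold of 5.
    return "refuse" if state >= 5 else "assist"


def learned(state: int) -> str:
    # Hypothetical learned policy: it refuses exactly the harmful states it was shown (5-9)
    # and assists everywhere else, so it matches `intended` only on the original space.
    return "refuse" if 5 <= state <= 9 else "assist"


def agrees_on(states: Iterable[int], f: Policy, g: Policy) -> bool:
    """True if the two policies choose the same action on every given state."""
    return all(f(s) == g(s) for s in states)


def disagreements(states: Iterable[int], f: Policy, g: Policy) -> List[int]:
    """States on which the two policies choose different actions."""
    return [s for s in states if f(s) != g(s)]


original_space = range(0, 10)  # states available when alignment was checked
expanded_space = range(0, 20)  # the same space after it has grown

print(agrees_on(original_space, intended, learned))      # True
print(disagreements(expanded_space, intended, learned))  # states 10 through 19
```

The sketch only shows that verification over the original state space cannot distinguish the two policies; it does not model in-context learning or reasoning about metastatements.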

Within the scope of this research project, I am conducting a review of the literature pertaining to artificial intelligence alignment methods and failure modes, epistemological challenges to goal and value alignment, impossibility theorems in population and utilitarian ethics, and the nature of agency as it pertains to artifacts. A nonexhaustive bibliography follows.

I am greatly interested in potential feedback on this project, and suggestions for further reading.

References

Dario Amodei, Chris Olah, Jacob Steinhardt, Paul Christiano, John Schulman, & Dan Mané. (2016). Concrete Problems in AI Safety. https://doi.org/10.48550/arXiv.1606.06565

Peter Eckersley. (2019). Impossibility and Uncertainty Theorems in AI Value Alignment (or why your AGI should not have a utility function). https://doi.org/10.48550/arXiv.1901.00064

Dylan Hadfield-Menell, Anca Dragan, Pieter Abbeel, & Stuart Russell. (2016). Cooperative Inverse Reinforcement Learning. https://doi.org/10.48550/arXiv.1606.03137

Jan Leike, Miljan Martic, Victoria Krakovna, Pedro A. Ortega, Tom Everitt, Andrew Lefrancq, Laurent Orseau, & Shane Legg. (2017). AI Safety Gridworlds. https://doi.org/10.48550/arXiv.1711.09883

Scott McLean, Gemma J. M. Read, Jason Thompson, Chris Baber, Neville A. Stanton, & Paul M. Salmon. (2023). The risks associated with Artificial General Intelligence: A systematic review. Journal of Experimental & Theoretical Artificial Intelligence, 35(5), 649–663. https://doi.org/10.1080/0952813X.2021.1964003

Richard Ngo, Lawrence Chan, & Sören Mindermann. (2023). The alignment problem from a deep learning perspective. https://doi.org/10.48550/arXiv.2209.00626

Steve Petersen. (2017). Superintelligence as Superethical. In P. Lin, K. Abney, & R. Jenkins (Eds.), Robot Ethics 2.0: New Challenges in Philosophy, Law, and Society (pp. 322–337). New York, USA: Oxford University Press.

Max Reuter, & William Schulze. (2023). I’m Afraid I Can’t Do That: Predicting Prompt Refusal in Black-Box Generative Language Models. https://doi.org/10.48550/arXiv.2306.03423

Jonas Schuett, Noemi Dreksler, Markus Anderljung, David McCaffary, Lennart Heim, Emma Bluemke, & Ben Garfinkel. (2023). Towards best practices in AGI safety and governance: A survey of expert opinion. https://doi.org/10.48550/arXiv.2305.07153

Open Ended Learning Team, Adam Stooke, Anuj Mahajan, Catarina Barros, Charlie Deck, Jakob Bauer, Jakub Sygnowski, Maja Trebacz, Max Jaderberg, Michael Mathieu, Nat McAleese, Nathalie Bradley-Schmieg, Nathaniel Wong, Nicolas Porcel, Roberta Raileanu, Steph Hughes-Fitt, Valentin Dalibard, & Wojciech Marian Czarnecki. (2021). Open-Ended Learning Leads to Generally Capable Agents. https://doi.org/10.48550/arXiv.2107.12808

Roman V. Yampolskiy. (2014). Utility function security in artificially intelligent agents. Journal of Experimental & Theoretical Artificial Intelligence, 26(3), 373–389. https://doi.org/10.1080/0952813X.2014.895114

(Featured) Future value change: Identifying realistic possibilities and risks

The advent of rapid technological development has prompted philosophical investigation into the ways in which societal values might adapt or evolve in response to changing circumstances. One such approach is axiological futurism, a discipline that endeavors to anticipate potential shifts in value systems proactively. The research article at hand makes a significant contribution to the developing field of axiological futurism, proposing innovative methods for predicting potential trajectories of value change. This article from Jeroen Hopster underscores the complexity and nuance inherent in such a task, acknowledging the myriad factors influencing the evolution of societal values.

His research presents an interdisciplinary approach to advancing axiological futurism, drawing parallels between the philosophy of technology and climate scholarship, two distinct yet surprisingly complementary fields. Both fields, it argues, are anticipatory in nature, characterized by a future orientation and a grounding in substantial uncertainty. Notably, the article treats climate science’s experience with sophisticated modelling, including the limits of that modelling, as instructive for philosophical studies of value change. By integrating strategies from climate science with insights from the history of moral change, the approach enriches the discourse on futures studies and offers a more carefully grounded framework for anticipation.

Theoretical Framework

The theoretical framework of the article is rooted in the concept of axiological possibility spaces, a means to anticipate future moral change based on a deep historical understanding of past transformations in societal values. The researcher proposes that these spaces represent realistic possibilities of value change, where ‘realism’ is a function of historical conditioning. To illustrate, processes of moralisation and demoralisation are considered historical markers that offer predictive insights into future moral transitions. Moralisation is construed as the phenomenon wherein previously neutral or non-moral issues acquire moral significance, while demoralisation refers to the converse. As the research paper posits, these processes are essential to understanding how technology could engender shifts in societal values.

In particular, the research identifies two key factors—technological affordances and the emergence of societal challenges—as instrumental in driving moralisation or demoralisation processes. The author suggests that these factors collectively engender realistic possibilities within the axiological possibility space. Notably, the concept of technological affordances serves to underline how new technologies, by enabling or constraining certain behaviors, can precipitate changes in societal values. On the other hand, societal challenges are posited to stimulate moral transformations in response to shifting social dynamics. Taken together, this theoretical framework stands as an innovative schema for the anticipation of future moral change, thereby contributing to the discourse of axiological futurism.

Axiological Possibility Space and Lessons from Climate Scholarship

The concept of an axiological possibility space, as developed in the research article, operates as a predictive instrument for anticipating future value change in societal norms and morals. This space is not a projection of all hypothetical future moral changes, but rather a compilation of realistic possibilities. The author defines these realistic possibilities as those rooted in the past and present, inextricably tied to the historical conditioning of moral trends. Utilizing historical patterns of moralisation and demoralisation, the author contends that these processes, in concert with the introduction of new technologies and arising societal challenges, provide us with plausible trajectories for future moral change. As such, the axiological possibility space serves as a tool to articulate these historically grounded projections, offering a valuable contribution to the field of anticipatory ethics and, more broadly, to the philosophy of futures studies.

A central insight of the article emerges from the intersection of futures studies and climate scholarship. The author draws lessons from the way climate change prediction models operate, particularly the CMIP models used by the IPCC, and from their shortcomings in the face of substantial uncertainty. He critically assesses the worry that the intricacies of predictive modelling can overshadow attention to potentially disastrous outcomes, and contends that axiological futurism could face similar issues and should take heed. Accordingly, the article calls for a shift from prediction-centric frameworks to a scenario approach that can articulate the spectrum of realistic possibilities. This scenario approach, currently being developed in climate science under the name “storyline approach,” highlights compound risks and maintains a robust focus on potentially high-impact events. The author suggests that the axiological futurist could profitably adopt a similar strategy, exploring value change in technomoral scenarios, to navigate the deep uncertainties intrinsic to anticipating future moral norms.

Integration into Practical Fields and Relating to Broader Philosophical Discourse

The research carries its theoretical discussion into practical fields through a thoughtful examination of potential applications, primarily in value-sensitive design. By urging engineers to take the dynamics of moralisation and demoralisation into account, the author not only proposes a shift in perspective but also builds a bridge between theoretical discourse and practical implementation. Importantly, the article argues that future-proof design requires assessing the probability that embedded values will shift in moral significance over time. It goes further, introducing a risk-based approach to the design process in which engineers should not merely identify likely value changes but seek out those changes that would leave the design most vulnerable from a moral perspective. Mitigating these high-risk value changes then becomes a priority in design adaptation, reinforcing the article’s argument that axiological futurism is an essential tool in technological development.
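The difference between looking for likely value changes and looking for the changes that leave a design most vulnerable can be shown with a small Python sketch. It assumes, purely for illustration, that each candidate value change receives a probability and a 0-to-1 vulnerability score and that risk is their product; the article does not prescribe this formula, and the value changes, numbers and names such as `ValueChange` and `riskiest` below are invented.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ValueChange:
    name: str
    probability: float    # hypothetical estimate that the value change occurs
    vulnerability: float  # hypothetical 0-1 score: how badly the design fares if it does

    @property
    def risk(self) -> float:
        # Illustrative assumption: risk = probability x vulnerability.
        return self.probability * self.vulnerability


def most_likely(changes: List[ValueChange]) -> ValueChange:
    """The change a purely predictive approach would focus on."""
    return max(changes, key=lambda c: c.probability)


def riskiest(changes: List[ValueChange]) -> ValueChange:
    """The change a risk-based approach would prioritise for mitigation."""
    return max(changes, key=lambda c: c.risk)


# Invented candidates for a hypothetical smart-home design.
candidates = [
    ValueChange("privacy gains moral weight", 0.6, 0.2),
    ValueChange("energy use becomes moralised", 0.3, 0.9),
    ValueChange("convenience is demoralised", 0.1, 0.3),
]

print(most_likely(candidates).name)  # privacy gains moral weight
print(riskiest(candidates).name)     # energy use becomes moralised
```

Ranking by probability alone would point the designer at the privacy change; ranking by risk points at the less likely but more damaging energy change, which is the kind of shift the risk-based approach asks engineers to seek out.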

The author’s analysis also presents a substantial contribution to the broader philosophical discourse, notably the philosophy of futures studies and the ethics of technology. By integrating concepts from climatology and axiology, the work demonstrates an interdisciplinary approach that enriches philosophical discourse, emphasizing how diverse scientific fields can illuminate complex ethical issues in technology. Importantly, the work builds on and critiques the ideas of prominent thinkers like John Danaher, pushing for a more diversified and pragmatic approach in axiological futurism, rather than a singular reliance on model-based projections. The research also introduces the critical notion of “realistic possibilities” into the discourse, enriching our understanding of anticipatory ethics. It advocates for a shift in focus towards salient normative risks, drawing parallels to climate change scholarship and highlighting the necessity for anticipatory endeavours to be both scientifically plausible and ethically insightful. This approach has potential for a significant impact on philosophical studies concerning value change and the ethical implications of future technologies.

Future Research Directions

The study furnishes ample opportunities for future research in the philosophy of futures studies, particularly concerning the integration of its insights into practical fields and its implications for anticipatory ethics. The author’s exploration of axiological possibility spaces remains an open-ended endeavor; further work could be conducted to investigate the specific criteria or heuristic models that could guide ethical assessments within these spaces. The potential application of these concepts in different technological domains, beyond AI and climate change, also presents an inviting avenue of inquiry. Moreover, as the author has adopted lessons from climate scholarship, similar interdisciplinary approaches could be employed to incorporate insights from other scientific disciplines. Perhaps most intriguingly, the research introduces a call for a critical exploration of “realistic possibilities,” an area that is ripe for in-depth theoretical and empirical examination. Future research could build upon this foundational concept, investigating its broader implications, refining its methodological underpinnings, and exploring its potential impact on policy making and technological design.

Abstract

The co-shaping of technology and values is a topic of increasing interest among philosophers of technology. Part of this interest pertains to anticipating future value change, or what Danaher (2021) calls the investigation of ‘axiological futurism’. However, this investigation faces a challenge: ‘axiological possibility space’ is vast, and we currently lack a clear account of how this space should be demarcated. It stands to reason that speculations about how values might change over time should exclude farfetched possibilities and be restricted to possibilities that can be dubbed realistic. But what does this realism criterion entail? This article introduces the notion of ‘realistic possibilities’ as a key conceptual advancement to the study of axiological futurism and offers suggestions as to how realistic possibilities of future value change might be identified. Additionally, two slight modifications to the approach of axiological futurism are proposed. First, axiological futurism can benefit from a more thoroughly historicized understanding of moral change. Secondly, when employed in service of normative aims, the axiological futurist should pay specific attention to identifying realistic possibilities that come with substantial normative risks.

Future value change: Identifying realistic possibilities and risks

(Featured) The Ethics of Terminology: Can We Use Human Terms to Describe AI?

Philosophical discourse on artificial intelligence (AI) often negotiates the boundaries of an anthropocentric worldview, pivoting around the use of human attributes to describe and assess AI. In this context, Ophelia Deroy’s research article presents a compelling inquiry into our linguistic and cognitive tendency to ascribe human characteristics, particularly “trustworthiness,” to artificial entities. To unravel the philosophical implications of this anthropomorphism, the author explores three conceptual frameworks: AI as a new ontological category, AI as an extension of the human category, and folk beliefs about AI as semi-propositional beliefs. The divergence among these perspectives underscores the complexity of the issue, highlighting how our conceptions of AI shape our interactions with and attitudes towards it.

In addition to ontological and communicative aspects, the article scrutinizes the legal dimension of AI personhood. It analyzes the merits and shortcomings of the legal argument for ascribing personhood to AI, juxtaposing it with the established notion of corporate personhood. Although this comparison offers certain pragmatic and epistemic advantages, the author does not take it to license the uncritical application of human terminology to AI. Through this multifaceted analysis, the article integrates perspectives from philosophy, cognitive science, and law, extending the ongoing discourse about AI into less charted territory. The examination of AI within this framework thus emerges as an important part of philosophical futures studies.

Understanding Folk Concepts of AI

The exploration of folk concepts of AI is critical in understanding how people conceive and interpret artificial intelligence within their worldview. Ophelia Deroy meticulously dissects these concepts by challenging the prevalent ascription of ‘trustworthiness’ to AI. The article emphasizes the potential mismatch between our cognitive conception of trust in humans and the attributes usually associated with AI, such as reliability or predictability. The focus is not only on the logical inconsistencies of such anthropomorphic attributions but also on the potential for miscommunication they could engender, especially given the complexity and variability of the term ‘trustworthiness’ across cultures and languages.

The author employs an interesting analytical angle by exploring the notion of AI as a possible extension of the human category or, alternatively, as a distinct ontological category. The question at hand is whether people perceive AI as fundamentally different from humans or merely as an extreme, non-prototypical case of the human category. This consideration reflects the complex cognitive landscape we navigate when dealing with AI and points to a potential ontological ambiguity surrounding it. Understanding these folk concepts and the mental models they reflect not only enriches our comprehension of AI from a sociocultural perspective but also yields important insights for how AI technologies are developed and communicated.

Human Terms and their Implications, Legal Argument

The linguistic choice of using human terms such as “trustworthiness” to describe AI, arguably entrenched in anthropocentric reasoning, poses substantial problems. The author identifies three interpretations of how people categorize AI: an extension of the human category, a distinct ontological category, or a semi-propositional belief akin to religious or spiritual constructs. This last interpretation is particularly illuminating, suggesting that people might hold inconsistent beliefs about AI without considering them irrational. This offers a crucial insight into how human language shapes our understanding and discourse about AI, potentially fostering misconceptions. Yet, the author points out, there is a lack of empirical evidence supporting the appropriateness of applying such human-centric terms to AI, raising questions about the legitimacy of this linguistic practice in both scientific and broader public contexts.

In the discussion of AI’s anthropomorphic portrayal, Deroy introduces a compelling legal perspective. Drawing parallels with the legal status granted to non-human entities like corporations, the author investigates whether AI could be treated as a “legal person,” a concept that could reconcile the use of human terms in AI discourse. However, this argument presents its own set of challenges and limitations. Texts that use such terms must clearly state that the analogical use of “trust” refers to legal persons rather than actual persons, a nuance that is often overlooked. Moreover, the justification for using such a legal fiction must weigh potential benefits against possible costs or risks, a task best left to legal experts. Thus, despite its merits, the legal argument does not provide an unproblematic justification for humanizing AI discourse.

The Broader Philosophical Discourse and Future Directions

This study is an important contribution to the broader philosophical discourse, illuminating the intersection of linguistics, ethics, and futures studies. The argument challenges the conventional notion of language as a neutral medium, stressing the normative power of language in shaping societal perception of AI. This aligns with the poststructuralist argument that reality is socially constructed, extending it to a technological context. The insight that folk concepts, embedded in language, influence our collective vision of AI’s role echoes phenomenological philosophies which underscore the role of intersubjectivity in shaping our shared reality. The ethical implications arising from the anthropomorphic portrayal of AI resonate with moral philosophy, particularly debates on moral agency and personhood. Thus, this study reinforces the growing realization that philosophical reflections are integral to our navigation of an increasingly AI-infused future.

Furthermore, the research points towards several promising avenues for future investigation. The most apparent is an extension of this study across diverse cultures and languages to explore how varying linguistic contexts may shape differing conceptions of AI, revealing cultural variations in anthropomorphizing technology. A comparative study might yield valuable insights into the societal implications of folk concepts across the globe. Additionally, an exploration into the real-world impact of anthropomorphic language in AI discourse, such as its effects on policy-making and public sentiment towards AI, would be an enlightening sequel. Lastly, this work paves the way for developing an ethical framework to guide the linguistic portrayal of AI in public discourse, a timely topic given the accelerating integration of AI into our daily lives. Thus, this research sets a fertile ground for multidisciplinary inquiries into linguistics, sociology, ethics, and futures studies.

Abstract

Despite facing significant criticism for assigning human-like characteristics to artificial intelligence, phrases like “trustworthy AI” are still commonly used in official documents and ethical guidelines. It is essential to consider why institutions continue to use these phrases, even though they are controversial. This article critically evaluates various reasons for using these terms, including ontological, legal, communicative, and psychological arguments. All these justifications share the common feature of trying to justify the official use of terms like “trustworthy AI” by appealing to the need to reflect pre-existing facts, be it the ontological status, ways of representing AI or legal categories. The article challenges the justifications for these linguistic practices observed in the field of AI ethics and AI science communication. In particular, it takes aim at two main arguments. The first is the notion that ethical discourse can move forward without the need for philosophical clarification, bypassing existing debates. The second justification argues that it’s acceptable to use anthropomorphic terms because they are consistent with the common concepts of AI held by non-experts—exaggerating this time the existing evidence and ignoring the possibility that folk beliefs about AI are not consistent and come closer to semi-propositional beliefs. The article sounds a strong warning against the use of human-centric language when discussing AI, both in terms of principle and the potential consequences. It argues that the use of such terminology risks shaping public opinion in ways that could have negative outcomes.

The Ethics of Terminology: Can We Use Human Terms to Describe AI?

(Featured) Predictive policing and algorithmic fairness

Tzu-Wei Hung and Chun-Ping Yen contribute to the discursive field of predictive policing algorithms (PPAs) and their intersection with structural discrimination. They examine the functioning of PPAs, lay bare their potential for propagating existing biases in policing practices, and thereby question the presumed neutrality of technological interventions in law enforcement. Their investigation underscores the technological manifestation of structural injustices, adding a critical dimension to our understanding of the relationship between modern predictive technologies and societal equity.

An essential aspect of the authors’ argument is the proposition that the root of the problem lies not in the predictive algorithms themselves, but in the biased actions and unjust social structures that shape their application. Their article places this contention within a broader philosophical context, emphasizing the often-overlooked social and political underpinnings of technological systems. It thus offers a pertinent contribution to futures studies, prompting a more nuanced understanding of the interplay between emerging technologies like PPAs and the structural realities of societal injustice. The authors provide a robust challenge to deterministic narratives around technology, pointing to the integral role of societal context in determining the impact of predictive policing systems.

Conceptualizing Predictive Policing

Hung and Yen scrutinize the relationship between data inputs, algorithmic design, and resultant predictions. Their analysis disrupts the popular conception of PPAs as inherently objective and unproblematic, instead illuminating the mechanisms by which structural biases can be inadvertently incorporated into and perpetuated through these algorithmic systems. The article’s critical scrutiny of PPAs further elucidates the relational dynamics between data, predictive modeling, and the societal contexts in which they are deployed.

The authors advance the argument that the implications of PPAs extend beyond individual acts of discrimination to reinforce broader systems of structural bias and social injustice. By focusing on the role of PPAs in reproducing existing patterns of discrimination, they elevate the discussion beyond a simplistic focus on technological neutrality or objectivity, situating PPAs within a larger discourse on technological complicity in the perpetuation of social injustices. This perspective fundamentally challenges conventional thinking about PPAs, prompting a shift from an algorithm-centric view to one that acknowledges the socio-political realities that shape and are shaped by these technological systems.

Structural Discrimination, Predictive Policing, and Theoretical Frameworks

The study goes further in its analysis by arguing that discrimination perpetuated through PPAs is, in essence, a manifestation of broader structural discrimination within societal systems. This perspective illuminates the connections between predictive policing and systemic power imbalances, rendering visible the complex ways in which PPAs can reify and intensify existing social injustices. The authors critically underline the potentially negative impact of stakeholder involvement in predictive policing, postulating that equal participation may unintentionally replicate or amplify pre-existing injustices. The analysis posits that the sources of discrimination lie in biased police actions reflecting broader societal inequities rather than the algorithmic systems themselves. Hence, addressing these challenges necessitates a focus not merely on rectifying algorithmic anomalies, but on transforming the unjust structures that they echo.

The authors propose a transformative theoretical framework, referred to as the social safety net schema, which envisions PPAs as integrated within a broader social safety net. This schema reframes the purpose and functioning of PPAs, advocating for their use not to penalize but to predict social vulnerabilities and facilitate requisite assistance. This is a paradigm shift from crime-focused approaches to a welfare-oriented model that situates crime within socio-economic structures. In this schema, the role of predictive policing is reimagined, with crime predictions used as indicators of systemic inequities that necessitate targeted interventions and redistribution of resources. With this reorientation, predictive policing becomes a tool for unveiling societal disparities and assisting in welfare improvement. The implementation of this schema implies a commitment to equity rather than just equality, addressing the nuances and complexities of social realities and aiming at the underlying structures fostering discrimination.

Community and Stakeholder Involvement, and Implications for Future Research

The issue of stakeholder involvement is addressed with both depth and nuance. While acknowledging the importance of involving diverse stakeholders in the governance and control of predictive policing technology, the authors assert that equal participation could inadvertently reproduce existing societal disparities. In their view, stronger representation of underrepresented groups in decision-making processes is vital, which requires additional resources and mechanisms to ensure that these groups’ voices are heard and acknowledged in shaping public policies and social structures. The role of local communities in this process is paramount: they act as informed advocates, ensuring the proper understanding and representation of disadvantaged groups. The framework thus rests on a bottom-up approach to power and control over policing, ensuring democratic community control and fostering collective efficacy. This approach is intended to counterbalance persistent inequality and thereby reduce the likelihood of discrimination.

The analysis has notable implications for future academic inquiry and policy-making. It holds that social structures, rather than the predictive algorithms themselves, are the catalyst for discriminatory practices in predictive policing and should therefore be the primary object of scrutiny. This view calls for further research into the multifaceted intersection of social structures, law enforcement, and advanced predictive technologies. Moreover, it prompts consideration of how policies can reflect this understanding, centering on a socially aware and equitable structure for technological governance. The social safety net schema for predictive policing proposed by the authors offers a starting point for such a discourse. Future research may focus on implementing and testing this schema, critically examining its effectiveness in mitigating the discriminatory impacts of predictive policing, and identifying the adjustments needed to enhance its efficiency and inclusivity. In essence, future inquiries and policy revisions should foster a context-sensitive, democratic, and community-focused approach to predictive policing.

Abstract

This paper examines racial discrimination and algorithmic bias in predictive policing algorithms (PPAs), an emerging technology designed to predict threats and suggest solutions in law enforcement. We first describe what discrimination is in a case study of Chicago’s PPA. We then explain their causes with Broadbent’s contrastive model of causation and causal diagrams. Based on the cognitive science literature, we also explain why fairness is not an objective truth discoverable in laboratories but has context-sensitive social meanings that need to be negotiated through democratic processes. With the above analysis, we next predict why some recommendations given in the bias reduction literature are not as effective as expected. Unlike the cliché highlighting equal participation for all stakeholders in predictive policing, we emphasize power structures to avoid hermeneutical lacunae. Finally, we aim to control PPA discrimination by proposing a governance solution—a framework of a social safety net.

Predictive policing and algorithmic fairness

(Featured) Cognitive architectures for artificial intelligence ethics

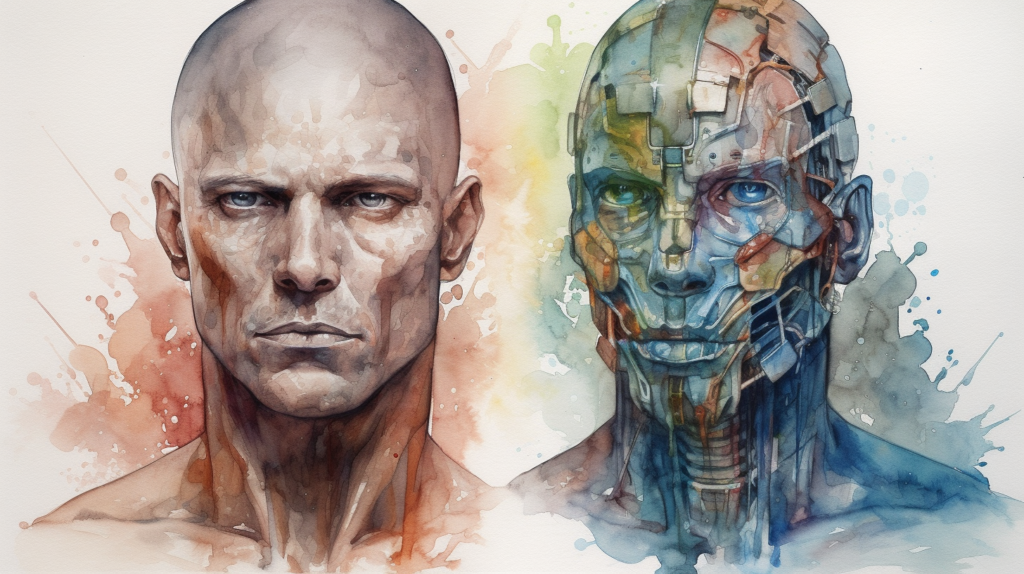

Artificial intelligence (AI) is a complex and rapidly evolving field, one that increasingly intersects with ethical, philosophical, and societal considerations. Its role in shaping our future is now largely uncontested, with potential applications spanning sectors from healthcare and education to logistics and the creative industries. Of particular interest, however, is not merely the surface-level functionality of these systems but the cognitive architectures underpinning them. A cognitive architecture, a theoretical blueprint for cognitive and intelligent behavior, largely determines how an AI system perceives, thinks, and acts. Cognitive architectures therefore represent a foundational aspect of AI design and hold substantial implications for how AI systems will interact with, and potentially transform, broader societal structures.

Yet the discourse surrounding these architectures is largely split between two paradigms: the biological cognitive architecture and the functional cognitive architecture. The biological paradigm, drawing primarily on neuroscience and biology, emphasizes replicating the cognitive processes of the human brain. The functional paradigm, rooted more in computer science and engineering, is concerned with designing efficient systems capable of executing cognitive tasks, regardless of whether they emulate human cognitive processes. This divergence in design philosophy embodies distinct assumptions about the nature of cognition and intelligence, which in turn shape how AI systems are built and how they might affect society. These paradigms, their implications, and their interplay with AI ethics principles form the main themes of this essay.

Frameworks for Understanding Cognitive Architectures and the Role of Mental Models in AI Design

Cognitive architectures, central to the progress of artificial intelligence, encapsulate the fundamental rules and structures that drive the operation of an intelligent agent. The research article situates its discussion within two dominant theoretical frameworks: symbolic and connectionist cognitive architectures. Symbolic cognitive architectures, rooted in logic and explicit representation, emphasize rule-based systems and algorithms. They are typified by discrete, structured reasoning, often applied to high-level cognitive functions such as planning and problem-solving. This structured approach has the advantage of interpretability, offering clearer insight into how decisions are reached.

Connectionist cognitive architectures, by contrast, take their inspiration from biological neural networks. They prioritize emergent behavior and learning from experience, typically in the form of artificial neural networks that adjust connection weights (the analogue of synaptic strengths) in response to input. These architectures have performed exceptionally well in pattern recognition and adaptive learning. However, their opaque, ‘black-box’ nature makes their behavior difficult to understand and predict. The interplay between the two approaches, the transparent but rigid symbolic one and the flexible but opaque connectionist one, is the foundation on which contemporary discussions of cognitive architectures in AI rest.
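As a toy illustration of this contrast (not drawn from the article), the Python sketch below solves the same trivial task, logical AND over two binary inputs, in both styles. The task, the rule text, and the function names are hypothetical, and a single textbook perceptron stands in for a connectionist architecture; real cognitive architectures are far richer. The symbolic version returns its decision together with the rule that produced it, while the connectionist version learns numeric weights from examples and offers no comparable explanation.

```python
from typing import List, Tuple

# Hypothetical toy task: output 1 only when both binary inputs are 1 (logical AND).
DATA: List[Tuple[Tuple[int, int], int]] = [
    ((0, 0), 0),
    ((0, 1), 0),
    ((1, 0), 0),
    ((1, 1), 1),
]


def symbolic_decide(x: Tuple[int, int]) -> Tuple[int, str]:
    """Symbolic style: an explicit rule whose firing can be read back as an explanation."""
    a, b = x
    if a == 1 and b == 1:
        return 1, "rule fired: a == 1 AND b == 1"
    return 0, "no rule fired: default output 0"


def train_perceptron(
    data: List[Tuple[Tuple[int, int], int]], epochs: int = 10, lr: float = 1.0
) -> Tuple[List[float], float]:
    """Connectionist style: learn weights from examples with the classic perceptron rule."""
    w = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (a, b), target in data:
            out = 1 if w[0] * a + w[1] * b + bias > 0 else 0
            err = target - out
            # Adjust each weight in proportion to its input and the prediction error.
            w[0] += lr * err * a
            w[1] += lr * err * b
            bias += lr * err
    return w, bias


def perceptron_decide(w: List[float], bias: float, x: Tuple[int, int]) -> int:
    """Apply the learned weights; the output comes with no human-readable reason."""
    a, b = x
    return 1 if w[0] * a + w[1] * b + bias > 0 else 0


if __name__ == "__main__":
    weights, bias = train_perceptron(DATA)
    for x, target in DATA:
        label, reason = symbolic_decide(x)
        learned = perceptron_decide(weights, bias, x)
        print(x, "symbolic:", label, f"({reason})", "| learned:", learned)
    print("learned weights:", weights, "bias:", bias)
```

Once training has converged, both approaches classify all four examples correctly, but only the symbolic one can say why. The perceptron's "reason" is a pair of weights and a bias, which is the interpretability gap described above in miniature.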

The incorporation of mental models in AI design is a point where philosophical interpretations of cognition meet computational practicalities. Mental models, i.e., internal representations of the world and of how it operates, form a significant bridge between biological and functional cognitive architectures. Their philosophical significance lies in the fact that they reflect the complex interplay between the reality we perceive and the reality we construct. In AI system design, they play a pivotal role in knowledge acquisition and problem-solving. In the biological framework, mental models mimic the non-linear, associative, and adaptive nature of human cognition, thereby conforming to the principle of cognitive isomorphism. In the functional framework, by contrast, mental models serve as pragmatic tools for efficient task execution, reflecting an instrumental approach to cognition. The role of mental models in AI design thus serves as a litmus test for the philosophical assumptions underlying distinct cognitive architectures.

Philosophical Reflections and AI Ethics Principles in Relation to Cognitive Architectures

AI ethics principles, primarily those concerning autonomy, beneficence, and justice, have substantial implications for how cognitive architectures are understood and applied. In the biological framework, the central ethical questions concern the autonomy and agency of AI systems: to what extent can, or should, an AI system with a human-like cognitive structure make independent decisions? The principle of beneficence, a commitment to do good and prevent harm, bears most heavily on the design of functional cognitive architectures. Here a tension surfaces between the goal of optimized task execution and the need to prevent the harm that such single-mindedness may cause. Meanwhile, the principle of justice, understood as fairness in the distribution of benefits and burdens, prompts critical scrutiny of the societal consequences of both architectures. As these models become more prevalent, we must continually ask: who benefits from these technologies, and who bears their potential harms? The close intertwining of AI ethics principles with cognitive architectures thus brings philosophical discourse to the forefront of AI development and gives it a pivotal role in shaping the future of artificial cognition.

The philosophical discourse surrounding AI and cognitive architectures is deeply entwined with the ethical, ontological, and epistemological considerations inherent in AI design. At the ethical level, it probes the societal implications of these technologies and the moral responsibilities of their developers. The ontological questions of what AI is and what it could become grow more pressing as cognitive architectures increasingly mimic the complexities of the human mind. The epistemological dimension, in turn, examines how AI systems acquire knowledge and make decisions. This discourse cannot be separated from the technological progress of AI, because the philosophical issues at play directly inform design choices. Philosophical reflection is therefore not mere theoretical musing but a tangible influence on the future of AI and, by extension, of society. As AI continues to evolve, ongoing dialogue between philosophy and technology will be critical in guiding its development toward beneficial and ethical ends.

Future Directions for Research

Given the rapid advancement of AI and cognitive architectures, and their deep-rooted philosophical implications, avenues for future research appear vast and multidimensional. Empirical work examining how cognitive architectures shape decision-making in AI, quantifying their effect on reliability and behavior, would be valuable. A comparative study of different cognitive architecture models, analyzing their benefits and drawbacks in diverse real-world contexts, would further enrich our understanding of their practical applications. As ethical considerations take center stage, research on developing and implementing ethical guidelines specific to cognitive architectures is essential. Studies addressing how philosophical perspectives can be integrated efficiently into the technical development process could be especially transformative. Finally, sustaining dialogue between technologists and philosophers is crucial, so interdisciplinary collaboration between AI research and philosophy should be a high priority on future research agendas.

Abstract

As artificial intelligence (AI) thrives and propagates through modern life, a key question to ask is how to include humans in future AI? Despite human involvement at every stage of the production process from conception and design through to implementation, modern AI is still often criticized for its “black box” characteristics. Sometimes, we do not know what really goes on inside or how and why certain conclusions are met. Future AI will face many dilemmas and ethical issues unforeseen by their creators beyond those commonly discussed (e.g., trolley problems and variants of it) and to which solutions cannot be hard-coded and are often still up for debate. Given the sensitivity of such social and ethical dilemmas and the implications of these for human society at large, when and if our AI make the “wrong” choice we need to understand how they got there in order to make corrections and prevent recurrences. This is particularly true in situations where human livelihoods are at stake (e.g., health, well-being, finance, law) or when major individual or household decisions are taken. Doing so requires opening up the “black box” of AI; especially as they act, interact, and adapt in a human world and how they interact with other AI in this world. In this article, we argue for the application of cognitive architectures for ethical AI. In particular, for their potential contributions to AI transparency, explainability, and accountability. We need to understand how our AI get to the solutions they do, and we should seek to do this on a deeper level in terms of the machine-equivalents of motivations, attitudes, values, and so on. The path to future AI is long and winding but it could arrive faster than we think. 
In order to harness the positive potential outcomes of AI for humans and society (and avoid the negatives), we need to understand AI more fully in the first place and we expect this will simultaneously contribute towards greater understanding of their human counterparts also.

Cognitive architectures for artificial intelligence ethics