(Relevant Literature) Philosophy of Futures Studies: July 16th, 2023 – July 22nd, 2023

(Relevant Literature) Philosophy of Futures Studies: July 9th, 2023 – July 15th, 2023

Should Artificial Intelligence be used to support clinical ethical decision-making? A systematic review of reasons

Abstract

“Healthcare providers have to make ethically complex clinical decisions which may be a source of stress. Researchers have recently introduced Artificial Intelligence (AI)-based applications to assist in clinical ethical decision-making. However, the use of such tools is controversial. This review aims to provide a comprehensive overview of the reasons given in the academic literature for and against their use.”

Do Large Language Models Know What Humans Know?

Abstract

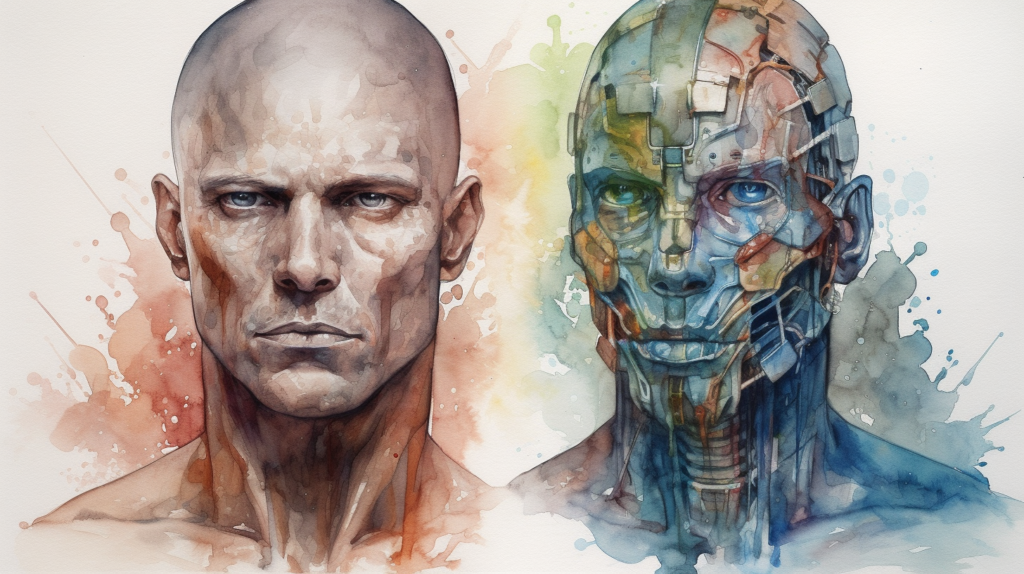

“Humans can attribute beliefs to others. However, it is unknown to what extent this ability results from an innate biological endowment or from experience accrued through child development, particularly exposure to language describing others’ mental states. We test the viability of the language exposure hypothesis by assessing whether models exposed to large quantities of human language display sensitivity to the implied knowledge states of characters in written passages. In pre-registered analyses, we present a linguistic version of the False Belief Task to both human participants and a large language model, GPT-3. Both are sensitive to others’ beliefs, but while the language model significantly exceeds chance behavior, it does not perform as well as the humans nor does it explain the full extent of their behavior—despite being exposed to more language than a human would in a lifetime. This suggests that while statistical learning from language exposure may in part explain how humans develop the ability to reason about the mental states of others, other mechanisms are also responsible.”

Scientific understanding through big data: From ignorance to insights to understanding

Abstract

“Here I argue that scientists can achieve some understanding of both the products of big data implementation as well as of the target phenomenon to which they are expected to refer – even when these products were obtained through essentially epistemically opaque processes. The general aim of the paper is to provide a road map for how this is done; going from the use of big data to epistemic opacity (Sec. 2), from epistemic opacity to ignorance (Sec. 3), from ignorance to insights (Sec. 4), and finally, from insights to understanding (Sec. 5, 6).”

Ethics of Quantum Computing: an Outline

Abstract

“This paper intends to contribute to the emerging literature on the ethical problems posed by quantum computing and quantum technologies in general. The key ethical questions are as follows: Does quantum computing pose new ethical problems, or are those raised by quantum computing just a different version of the same ethical problems raised by other technologies, such as nanotechnologies, nuclear plants, or cloud computing? In other words, what is new in quantum computing from an ethical point of view? The paper aims to answer these two questions by (a) developing an analysis of the existing literature on the ethical and social aspects of quantum computing and (b) identifying and analyzing the main ethical problems posed by quantum computing. The conclusion is that quantum computing poses completely new ethical issues that require new conceptual tools and methods.”

On The Social Complexity of Neurotechnology: Designing A Futures Workshop For The Exploration of More Just Alternative Futures

Abstract

Novel technologies like artificial intelligence or neurotechnology are expected to have social implications in the future. As they are in the early stages of development, it is challenging to identify potential negative impacts that they might have on society. Typically, assessing these effects relies on experts, and while this is essential, there is also a need for the active participation of the wider public, as they might also contribute relevant ideas that must be taken into consideration. This article introduces an educational futures workshop called Spark More Just Futures, designed to act as a tool for stimulating critical thinking from a social justice perspective based on the Capability Approach. To do so, we first explore the theoretical background of neurotechnology, social justice, and existing proposals that assess the social implications of technology and are based on the Capability Approach. Then, we present a general framework, tools, and the workshop structure. Finally, we present the results obtained from two slightly different versions (4 and 5) of the workshop. Such results led us to conclude that the designed workshop succeeded in its primary objective, as it enabled participants to discuss the social implications of neurotechnology, and it also widened the social perspective of an expert who participated. However, the workshop could be further improved.

Misunderstandings around Posthumanism. Lost in Translation? Metahumanism and Jaime del Val’s “Metahuman Futures Manifesto”

Abstract

Posthumanism is still a widely debated new field of contemporary philosophy that mainly aims at broadening the Humanist perspective. Academics, researchers, scientists, and artists are constantly transforming and evolving theories and arguments around the existing streams of Posthumanist thought (Critical Posthumanism, Transhumanism, Metahumanism), discussing whether these streams can finally integrate or will follow completely different paths towards completely new directions. This paper, written for the 1st Metahuman Futures Forum (Lesvos 2022), will focus on Metahumanism and Jaime del Val’s “Metahuman Futures Manifesto” (2022), mainly as an open dialogue with Critical Posthumanism.

IMAGINABLE FUTURES: A Psychosocial Study On Future Expectations And Anthropocene

Abstract

The future has become the central time of the Anthropocene due to multiple factors like climate crisis emergence, war, and COVID times. As a social construction, time brings a diversity of meanings, measures, and concepts permeating all human relations. The concept of time can be studied in a variety of fields, but in Social Psychology, time is the bond for all social relations. To understand Imaginable Futures as narratives that permeate human relations requires the discussion of how individuals are imagining, anticipating, and expecting the future. According to Kable et al. (2021), imagining future events activates two brain networks: one focuses on creating the new event within imagination, whereas the other evaluates whether the event is positive or negative. To further investigate this process, a survey with 40 questions was developed and administered to 312 individuals across all continents. The results show a relevant rupture between individual and global futures. The data also demonstrate that the future is an important asset of the now, and participants are not so optimistic about it. It is possible to notice a growing preoccupation with the global future and the uses of technology.

Taking AI risks seriously: a new assessment model for the AI Act

Abstract

“The EU Artificial Intelligence Act (AIA) defines four risk categories: unacceptable, high, limited, and minimal. However, as these categories statically depend on broad fields of application of AI, the risk magnitude may be wrongly estimated, and the AIA may not be enforced effectively. This problem is particularly challenging when it comes to regulating general-purpose AI (GPAI), which has versatile and often unpredictable applications. Recent amendments to the compromise text, though introducing context-specific assessments, remain insufficient. To address this, we propose applying the risk categories to specific AI scenarios, rather than solely to fields of application, using a risk assessment model that integrates the AIA with the risk approach arising from the Intergovernmental Panel on Climate Change (IPCC) and related literature. This integrated model enables the estimation of AI risk magnitude by considering the interaction between (a) risk determinants, (b) individual drivers of determinants, and (c) multiple risk types. We illustrate this model using large language models (LLMs) as an example.”

Creating a large language model of a philosopher

Abstract

“Can large language models produce expert-quality philosophical texts? To investigate this, we fine-tuned GPT-3 with the works of philosopher Daniel Dennett. To evaluate the model, we asked the real Dennett 10 philosophical questions and then posed the same questions to the language model, collecting four responses for each question without cherry-picking. Experts on Dennett’s work succeeded at distinguishing the Dennett-generated and machine-generated answers above chance but substantially short of our expectations. Philosophy blog readers performed similarly to the experts, while ordinary research participants were near chance distinguishing GPT-3’s responses from those of an ‘actual human philosopher’.”